Education and Automation: Tools for Navigating a Sea of Fake News

Newsrooms around the world have been quietly whispering about the slow death of journalism for decades, but 2016 has seen that whisper become a roar. Spurred on in no small part by one of the most-watched and most controversial presidential races in recent U.S. history, the manufacture of perilous misinformation, distributed en masse, has bred an increasingly toxic online news environment.

The Oxford English Dictionary has named “post-truth” as its word of the year, referring to the circumstance “in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.” That might be going too far, but there is no doubt that the internet has changed the way that news is created and consumed. Speed and spectacle are incentivized in reporting, at the cost of fact-checking and verification.

In a media economy based on clicks and views, each hit on a web page translates directly to dollars: annual digital ad spending in the U.S. grew to $59.6 billion in the past year. The value of responsible reporting, on the other hand, is extremely hard to quantify. Compounding the problem, news aggregators and social media sites have further eroded the value of institutional reputation by decontextualizing news from its source. In the race to capture eyeballs, being first and flashy beats being accurate.

These trends have caught news producers in a culture war between old-school responsible reporting and profitability, as institutions struggle to adapt to the internet. Shams, scams, and spam compete for space with legitimate articles, and fakes can look shockingly like the real thing. In the traditional environment, the laborious task of fact-checking was done by hand. Few people can spare the time and energy to fact-check every piece of information they come across, not even journalists. Stories that are “too good to check” are rushed to press, and the responsibility for verification is left to organizations like Snopes and PolitiFact. This is how fake news goes viral.

Adding to the problem, studies show that 44 percent of American adults now get at least some of their news from Facebook. But the quality of the news any individual social media user sees often depends not just on the people sharing it, but also on the proprietary, black-box algorithms working behind the scenes to determine what ends up in each user’s newsfeed.

Recently, both Google and Facebook have issued assurances that they are working hard to stem the tide of fake news now streaming through their respective platforms. Since the presidential election, other efforts to combat the spread of misinformation have included a published list of fake news sites and a verification browser plug-in built by a group of students at a hackathon. Most of these efforts have concentrated on identifying whether an information source is trustworthy, but the adage “attack the problem at the source” may not prove to be the best strategy, for two reasons.

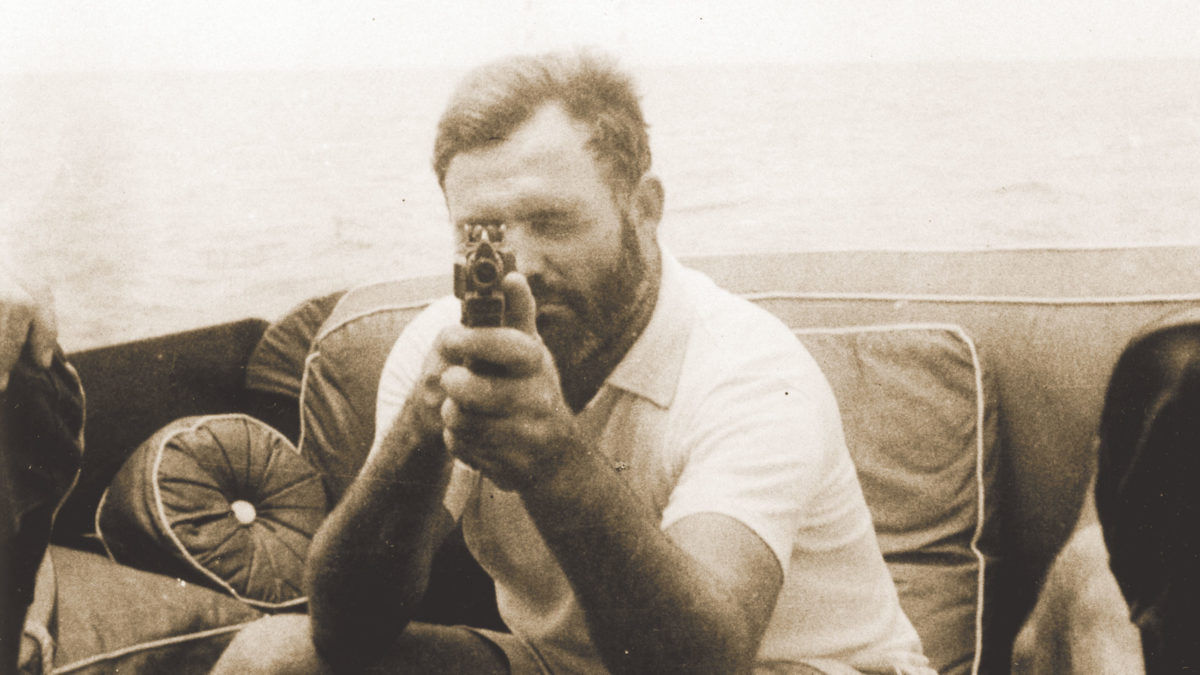

“Every man should have a built-in automatic crap detector operating inside him,” the writer Ernest Hemingway was quoted as saying in a 1965 article in The Atlantic magazine. Fifty years later, the need persists.

Visual: National Archives

First, websites peddling fake news are created every day; if one gets blacklisted, another simply takes its place. Keeping a list of such sites up to date becomes a never-ending game of virtual whack-a-mole. Second, misinformation can spread with alarming speed. News is downloaded, shared, and re-shared across many channels, so by the time you read a fake article, it has effectively been separated from its originating source, making it almost indistinguishable from actual news. Source verification is just one piece of the fake news puzzle; content needs to be addressed as well.

In 2015, our team at the University of Western Ontario published a series of short articles categorizing varieties of fakes and evaluating the role of text analytics in detecting them. Outright fraudulent news aims to create false conclusions in the reader’s mind, and it is often hard for a human reader to spot. Deceptive writing, it turns out, contains subtle yet detectable language patterns that algorithms can pick up. Automated deception detection is an interdisciplinary field that holds promise for distinguishing truthful messages from outright lies based on linguistic patterns and machine learning. Though such methods have rarely been applied to news so far, our 2015 experiments in detecting deception in news achieved a 63 percent success rate. Humans are notoriously worse at detecting lies, averaging 54 percent.
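As a rough illustration of what “language patterns” can mean in practice, the Python sketch below turns a text into a few numbers: the share of its words that fall into each of several cue categories. The cue lists, the category names, and the `cue_rates` function are hypothetical, chosen only for illustration; they should not be read as the feature set used in the experiments described above, and a real system would learn which patterns matter from labeled examples.

```python
import re
from collections import Counter

# Hypothetical cue-word lists, for illustration only. Real deception-detection
# systems learn which language patterns matter from labeled data.
CUES = {
    "first_person": {"i", "me", "my", "mine", "myself"},
    "negation": {"no", "not", "never", "nothing", "none"},
    "certainty": {"always", "definitely", "absolutely", "certainly", "undeniably"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def cue_rates(text: str) -> dict[str, float]:
    """Return, for each cue category, the fraction of all tokens that belong to it."""
    tokens = tokenize(text)
    if not tokens:
        return {name: 0.0 for name in CUES}
    counts = Counter(tokens)
    return {
        name: sum(counts[word] for word in words) / len(tokens)
        for name, words in CUES.items()
    }


print(cue_rates("I never said that. It is absolutely not true."))
```

For the sample sentence, which has nine tokens, the first-person rate is 1/9, the negation rate 2/9, and the certainty rate 1/9. Rates like these would then serve as inputs to a classifier trained on articles already labeled as truthful or deceptive.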

Plus, machines are tireless and programs are scalable. Humans are not.

Automated rumor debunking is another field, one that attempts to identify and stop the spread of rumors and hoaxes shared over social media, often in real time. It combines content features with analysis of network features such as users’ profiles, locations, and patterns of message propagation.

Other varieties of so-called fake news, in the style of comedians like Stephen Colbert or John Oliver, appear harmless. They take on social issues and aim strictly to entertain. To the extent that there ever is collateral damage, it is typically a matter of pure misunderstanding: if the joke goes unnoticed, the content is mistaken for real news and then shared ad nauseam. Based on content features like absurdity, humor, and incongruity, we were able to train an algorithm to differentiate this sort of satirical news from legitimate news, achieving 87 percent accuracy in summer 2016.
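To sketch how a classifier might learn from features like these, the Python example below fits a logistic regression by batch gradient descent to articles represented as three scores: absurdity, humor, and incongruity. Logistic regression is swapped in here as a simple, transparent choice and is not necessarily the model behind the 87 percent figure. The feature scores, the function names `train` and `predict_satire`, and the toy training data are all hypothetical, and how the scores themselves would be computed from text is left out.

```python
import math

# Each article is reduced to three hypothetical scores in [0, 1]:
# (absurdity, humor, incongruity). Computing these from text is out of scope.
Features = tuple[float, float, float]


def sigmoid(z: float) -> float:
    """Squash a real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples: list[Features], labels: list[int],
          lr: float = 0.5, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit logistic-regression weights and bias by batch gradient descent
    on the log loss. labels: 1 = satire, 0 = legitimate news."""
    w = [0.0, 0.0, 0.0]
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0, 0.0, 0.0]
        grad_b = 0.0
        for x, y in zip(samples, labels):
            # Gradient of the log loss for one example is (prediction - label) * input.
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i in range(3):
                grad_w[i] += err * x[i]
            grad_b += err
        # Step against the average gradient over the whole batch.
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_satire(w: list[float], b: float, x: Features) -> float:
    """Estimated probability that an article with scores x is satire."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Usage with hypothetical toy data (1 = satire, 0 = legitimate news).
samples = [(0.9, 0.8, 0.7), (0.8, 0.9, 0.9), (0.7, 0.6, 0.8),
           (0.1, 0.2, 0.1), (0.2, 0.1, 0.3), (0.0, 0.1, 0.2)]
labels = [1, 1, 1, 0, 0, 0]
w, b = train(samples, labels)
print(predict_satire(w, b, (0.85, 0.8, 0.8)), predict_satire(w, b, (0.1, 0.1, 0.1)))
```

In practice one would more likely reach for an established library such as scikit-learn, and accuracy is normally reported on articles held out from training rather than on the training set itself.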

The satire detector’s user interface will soon be made available for the general public to experiment with.

“Every man should have a built-in automatic crap detector operating inside him,” Ernest Hemingway was quoted as saying in a 1965 article. “It also should have a manual drill and a crank handle in case the machine breaks down.” Fifty years later, the need is greater than ever — and maybe these and similar tools will help.

Of course, all types of text analysis software — from deception-detectors and rumor-debunkers to satire-detectors — are designed to augment our human discernment, rather than replace it, by highlighting which news may warrant more scrutiny. Critical thinking on the part of the readers remains key, and therein lies the real problem. Studies have shown that over 20 percent of adults with university degrees have low literacy proficiency, with the situation much worse in the general population. To exacerbate the issue, many of these adults were educated at a time before the internet had such a strong influence over our lives — some before the World Wide Web was even invented. Information literacy skills are key to navigating the vast, online sea of fake news.

Technologies like our satire detector can help us deal with information overload by automating a certain level of quality control, but ultimately, the most important “crap” detector is the one inside our heads.

This article has been updated to clarify the timing of a quote attributed to Ernest Hemingway that appeared in a 1965 piece published in The Atlantic magazine.

Dr. Victoria L. Rubin is an associate professor in the Faculty of Information and Media Studies and the Director of the LiT.RL research lab at the University of Western Ontario, specializing in information retrieval and natural language processing. Yimin Chen is a PhD candidate in Library and Information Science (LIS) in the Faculty of Information and Media Studies at the University of Western Ontario. Niall J. Conroy is a recent PhD graduate from the Faculty of Information and Media Studies at the University of Western Ontario.