Just over 30 years ago, psychology professor Paul Slovic delivered an uncomfortable message to technology experts in the U.S. At the time, then-unfamiliar technologies like nuclear power and pesticides were just becoming a part of everyday life. People were nervous about them, and the experts sought to assuage their fears.

VIEWPOINTS

Partner content, op-eds, and Undark editorials.

But it wasn’t working. Large segments of the public voiced opposition. Left frustrated, policymakers and technical experts felt they needed a better way to convey what their statistical models told them: that the risks associated with the technologies were low and acceptable. They wanted to persuade the masses that they would be safe.

Implicit in the experts’ stance was the belief that, when it came to judging risk, they knew better than the “laypeople.” But Slovic’s seminal review made the case that people had a rich conceptualization of risk. The public’s decision-making factored in social concerns, attitudes, and emotions — not just statistics. Their judgments, he wrote, carried a complexity that wasn’t being captured by the experts’ verdicts.

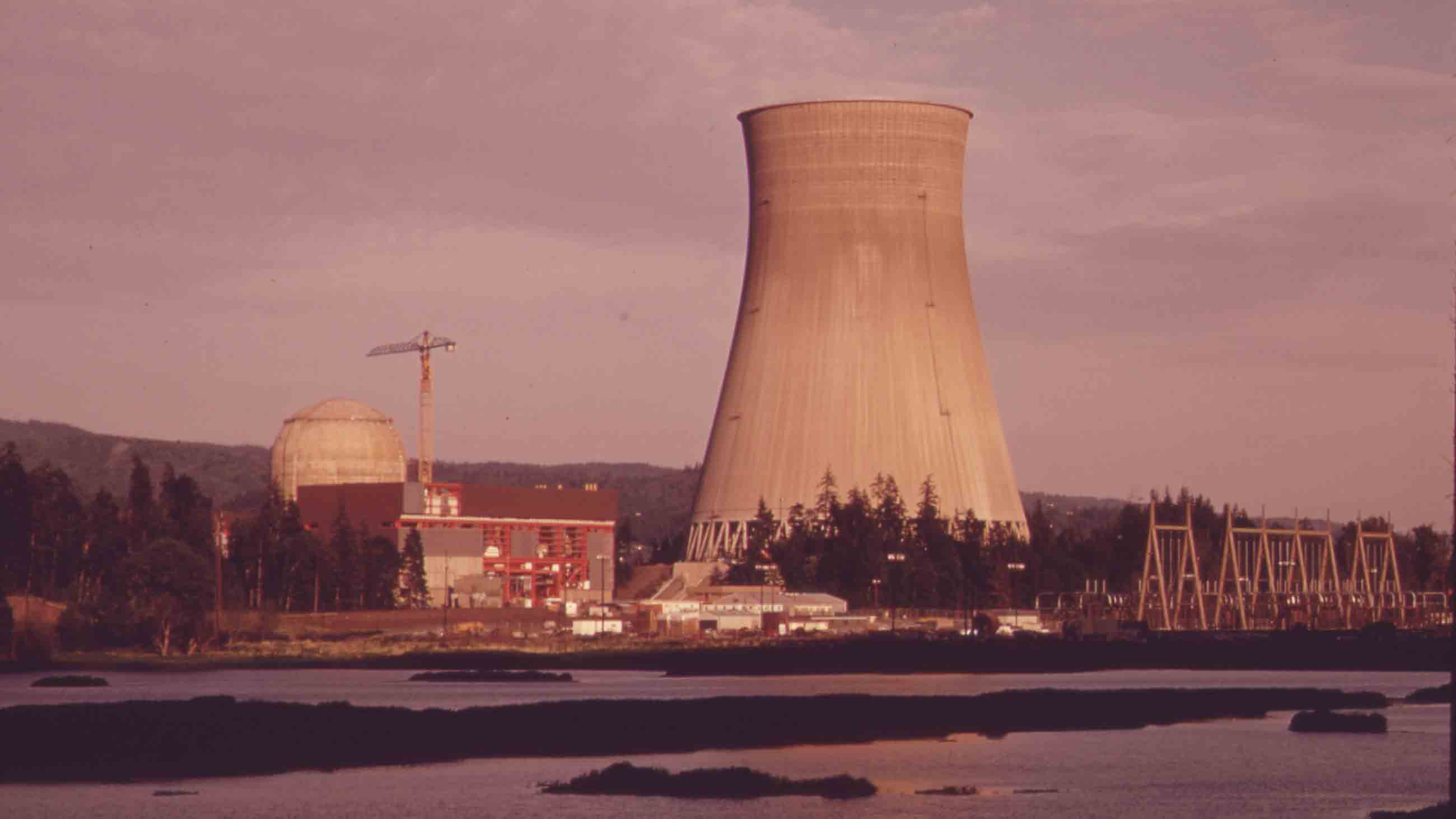

Not only did the experts fail to acknowledge that complexity, they reacted harshly to the public’s concerns, Slovic wrote. In the debate over nuclear power, a prominent issue of disagreement at the time, experts used words like “irrational,” “mistaken,” and “insane” to describe the public’s stance.

Fast-forward to today. Does any of this sound familiar?

Although today’s scientists rarely use such strong wording, they still operate largely on the conviction that people will get on board with scientific expertise if only the evidence is communicated better — an idea known as the deficit model.

But there are strong signs that, on contentious matters at least, the deficit model isn’t working. A recent Pew Research Center study found a wide gulf between the opinions of scientists and the American public on issues like GMOs and climate change. Scientific expertise has been very publicly challenged by politicians on both sides of the Atlantic in recent years. The Trump administration’s rollback of climate change policy and environmental safeguards is a sign of a public that, through its vote, has voiced a degree of doubt about, if not mistrust of, expertise.

As I’ve previously written, I believe we are facing this impasse because science is becoming increasingly entangled with the messy reality of social problems. That was especially evident this summer, when researchers reported that troll social media accounts traced to Russia had been spreading misinformation about vaccines. Such misinformation campaigns have helped feed the long-term trend of skepticism that’s led to a drop in vaccination rates and a measles comeback in the U.S. and Europe.

In other words, there are forces at play beyond science. If we are to have constructive public conversations about the science and technology that affects people’s lives, we must acknowledge that fact, look beyond the politics, and figure out a way to work with what might lie beneath the surface of public skepticism.

Slovic’s work is a good place to start. Three decades ago, the psychologist reviewed studies that assessed perceptions of risk, in one case comparing experts and members of the general public by using their risk ratings to rank the perceived dangers of 30 activities and technologies, including nuclear power, surgery, pesticides, and swimming. The work revealed that public perceptions were shaped in part by psychological and cognitive factors. The risk posed by nuclear power, for instance, evoked feelings of dread and was perceived to be unknown, uncontrollable, inequitable, potentially catastrophic, and likely to affect future generations. No amount of education would easily change anyone’s mind about it.

More recent work backs up Slovic’s findings: Studies have shown that attitudes on vaccination are linked to psychological traits and tend to align with political ideology. The same is true of climate change.

Slovic’s approach to probing how people think and respond to risk focused on the mental strategies, or heuristics, people use to make sense of uncertainty. Sometimes these strategies are valid; sometimes they lead to error. But heuristics aren’t deployed exclusively by the masses, Slovic wrote. Experts use them too, and are prone to similar errors. “Perhaps the most important message from this research is that there is wisdom as well as error in public attitudes and perceptions,” he concluded.

And this is a crucial point: To respect the public’s understanding of a technological risk is not to say that the public is right, or more correct than the experts. Rather, it is to acknowledge that the public has legitimate concerns that expert assessments might miss.

Slovic’s ideas have proliferated in social science circles and influenced the communication of risk over the years. As of today, his review has been cited nearly 9,000 times. The notion of a broader conception of risk has evolved into a concept known as risk as feelings. His work, along with similar work by people like Baruch Fischhoff and Sarah Lichtenstein, informed the 1996 report “Understanding Risk,” by the National Research Council. It was later expanded into a conceptual framework known as “the social amplification of risk.” Regulators eventually came to accept that the new paradigm should be factored into risk-management decisions.

But Slovic’s underlying message seems daring, even today. Are we ready to accept that attitudes, values, and emotions like fear and desire have a legitimate role in establishing “truth”? How can we negotiate compatible roles for scientific and non-scientific considerations in decision-making?

These are tricky propositions. Acknowledging non-scientific aspects of decision-making can appear to give legitimacy to the hidden agendas of interest groups that use uncertainty to sow doubt. Although Slovic’s work doesn’t imply that the public knows better than the experts, it could be spun to that effect. A complex science narrative is often no match for a simple, emotional message that seeks to undermine it. This is a serious pitfall.

Another pitfall is that, in the absence of the authority conferred by the established process of scientific consensus, social decision-making might devolve into protracted discussions that lead nowhere. Five years ago, Mike Hulme, then a professor of climate change at the University of East Anglia, suggested that we may not actually need consensus — that we might be better off acknowledging minority views in order to make disagreements explicit, a kind of “decision by dissensus.” It’s an intriguing idea, but may be ahead of its time.

Any moves to re-examine how experts communicate with the public about socially relevant science need to be carefully considered. But we can, at least, start a conversation. Just in the past year, I’ve already seen many commentaries and discussions take place under the rubric of “post-truth.” This is encouraging.

Again, Slovic’s work can provide useful guidance for these conversations. Back in 1987, he advocated a two-way communication process between experts and the public, saying that each side has valid insights and intelligence to contribute. That view of science communication is now familiar, often taking the form of light-handed efforts to “engage” the public. But it can, and should, go beyond that. Mike Stephenson, head of science and technology at the British Geological Survey, has written about the organization’s efforts to break down barriers between government scientists and local residents at sites where decarbonization technology is being tested. Its scientists turned up in church halls and community centers, ready to listen. They abandoned familiar academic-style lecterns, projectors, and rows of chairs in favor of drop-in sessions, dialogue, and props like pictures and maps.

Such efforts are demanding. They bring scientists and the public together in an uncomfortable space, where they are forced to try to understand each other. But if we are to find socially and scientifically acceptable solutions to contentious problems, perhaps that’s what it will take.

Anita Makri is a freelance writer, editor and producer based in London. Her work has been published in the Guardian, Nature, The Lancet, and SciDev.Net, among others.

Comments are automatically closed one year after article publication. Archived comments are below.

Gee, it’s like what, Religion and Science?

I’d trust Science more than any Religion.

Religion scares me; after all, we are constantly killing one another over Religious beliefs, in the name of a God.

Commercialization of science is a factor in loss of credibility. If some finding helps sell a product, it’s promoted; if not, it’s junk science. Very little science today is as definitive as Newtonian physics; findings are tentative. “More research should be performed” is a pretty standard phrase in conclusions. But maybe the public is still in the Newtonian mindset: that to be believed at all, science should provide “the answer,” and if it doesn’t, then my concept is as good as theirs.

Yes, we need dialog between scientists and “the public.” If such an ideal can be imagined, how can we form public learning groups, where the learning is not confined to the technology investigated by specialists?

If you adopt a religious worldview then it becomes clear how scientists and those who crusade for their findings actually make sure that ordinary people will not listen. Assumptions of authority and the moral high ground have led ordinary people to be suspicious and eventually to reject purely on principle.

Secular society was formed by rejecting control by an oligarchy. There has been over a century of secular development; the right to vote, to have an opinion, and to question authority.

Much resistance to science arises from the elite science bureaucracy acting very much like the old priestly bureaucracy and refusing to respect the ordinary person.

Not all scientific facts exist in the same social context. I believe most well-researched and replicated findings, but I do not always use those findings to decide how to behave. In particular I’m thinking about GMOs and their use in large-scale industrial farming. I accept that they probably pose a small risk to me individually, but I choose not to take that risk and avoid GMO foods as much as is possible. These techniques are controlled and used by powerful economic interests. Many scientists are socially linked to these interests through grants and employment. Why do scientists get so upset when people choose to avoid GMO foods and lobby Congress to ensure proper labeling? What interests do they serve?

Scientists like to say that “the plural of anecdote is not data” but for us laypeople, first- or second-hand anecdotal evidence overwhelmingly trumps scientific or statistical evidence. Serious adverse events from vaccination are rare, but if one of my kids had a seizure following vaccination, I would probably not vaccinate any of my kids after that. I would choose the free-rider path, knowing that my kids probably won’t get measles as long as YOUR kids are vaccinated. I’m not sure this is an irrational position.

It occurs to me that some, maybe many, people have a different concept of truth that is anchored to the “feeling” of truth…the kind generated through an honestly held belief in a deity or some cosmic-based intelligent power. The truth science defines is limited to what our perceptions and our inventions can verify, a very different foundation. Perhaps these people find it threatening for Science to have earned so much credit through the ever-building collection of provable facts. However vague their own consciousness of this might be, it might be functioning as tacit resistance to what they choose to describe as “too scientific”.

This is all very nice, but like dozens or scores of similar pieces, there is a word missing: “honesty.” Why do I never see that word, or its antonym “dishonesty,” used in this discussion? I agree that the knowledge deficit model is not working. But why should the honest scientific community be afraid of standing proudly on the moral ground of fact and calling out as immoral the dishonest denial of reality?

I would never label as dishonest a spiritual or philosophical belief. However, when discussing physical realities, I frequently denounce what I call “The Dishonesty of Belief.”

This is a moral issue because one cannot claim to be a moral person if one is dishonest about overwhelming evidence.

And I offer the reminder for these discussions that “belief” is a contronym which must be used with care. “Belief” is appropriately used to mean “faith,” which requires no evidence, but it can also be used to denote acceptance of something as fact because of valid evidence. Failure to recognize that dual meaning of “belief” often results in people talking past each other when discussing this issue.

I have a lot of experience engaging with science-denying creationists. Attitude is important; I can hardly expect them to be willing to learn from me unless I make it clear that I am ready to learn from *them*. And learn from them I have, being forced to sharpen my own logic and, on occasion, being told about relevant facts, which must be incorporated into a deeper understanding.

But one thing I have also learnt: to be very suspicious when anyone claims that “plenty of scientists” support this or that view. I have heard this said too often in support of creationism, as well as, repeatedly, climate change denial, and I always ask who, and on what grounds.

Really? https://pubs.acs.org/doi/abs/10.1021/es3051197?source=cen

I am a firm believer in not generating waste if I can’t somehow recycle it.

Thank you for this important article. As a scientist, I regularly struggle to communicate the importance of my work with animal rights activists. Your article, highlighting Slovic’s work, underscores the need for more storytelling, a la StoryCollider (https://www.storycollider.org/), by scientists, in exactly the kinds of settings that you describe at the end of your article. This kind of work by scientists will in fact “bring scientists and the public together in an uncomfortable space, where they are forced to try to understand each other.” It also speaks to Lisa Blair’s comment above that the conversations are too scientific.

Nuclear power is not equivalent to vaccinations. Chernobyl, Three Mile Island, Fukushima. Plenty of scientists are opposed to it because of the massive downside.

Interesting. I’ve long felt that the general public is not interested in science because the conversations are often too scientific, if you will. Our society operates under social norms, e.g. how does that impact me personally, my community, my state, my nation, and the world-at-large, though there is less concern about the world-at-large.