The consequences of irreproducible research are real. When patients with amyotrophic lateral sclerosis, or ALS, enroll in a clinical trial, it is their single chance of getting better: There is no cure for the disease, and the two drugs approved to treat it are only marginally effective. About a dozen drugs that have shown promising effects in mice have little or no effect in humans.

BOOK REVIEW — “Rigor Mortis: How Sloppy Science Creates Worthless Cures, Crushes Hope, and Wastes Billions,” by Richard Harris (Basic Books, 288 pages).

But why did those drugs inspire so much hope in the first place? The culprits were optimistic bias and analytic freedom. When researchers at the ALS Therapy Development Institute went back and redid the mouse studies on those same drugs — using more animals and better controls — every single drug failed to perform. In other words, they should never have even been tested in people.

In his new book “Rigor Mortis,” the NPR science reporter Richard Harris dissects how the modern practice of biomedicine encourages unreliable results. His motley collection of anecdotes is readable, enlightening — and ultimately inspiring for its profiles of the diverse obsessives striving for reform. Those planning a future in biomedical research should read the book; so should patients hoping for better therapies, and anyone who enjoys the science section in newspapers.

Alarm over irreproducible research splashed into the mainstream in 2012 with a Nature article in which C. Glenn Begley, former vice president of hematology and oncology research at Amgen, revealed that his company could confirm the scientific findings of only six of 53 landmark papers it had investigated in pursuit of new ways to treat cancer. It wasn’t the first time people had found swaths of papers unreliable. The fact that reams of bold new findings didn’t hold up was already an open secret among venture capitalists and industrial scientists, some of whom had written about the problems. But Begley’s publication, co-authored with a fellow cancer researcher, Lee M. Ellis, forced the issue into the limelight. Since then, the National Institutes of Health has announced plans to improve reproducibility and dozens of journals have demanded that researchers report more details of experiments.

The fight to improve reproducibility has many shifting fronts and is at constant risk of damage from friendly fire. The most successful battles have established a familiar pattern. A new technology (genome-wide analysis of genetic variants, say, or inventories of protein concentrations in biological fluids) promises to answer important questions about how a disease works. Scientists flock to the field, a slew of invalidating artifacts is uncovered, and past work is revealed as unreliable; scientists then set standards, reset expectations, and for the most part advance more wisely. One researcher tells Harris triumphantly that genomics has gone “from an unreliable field to a highly reliable field,” at least for those researchers savvy enough to follow the most current techniques.

There are broader problems, though. Science is hard under the best of circumstances, but the conventions of academic research make it even harder. Perverse incentives cause scientists to seek support for a hypothesis and avoid experiments that might disprove it. In this way, the scientific literature fills with “intellectual land mines” (as Harris puts it) that explode years of work when other scientists build on them without testing the ground first. Meanwhile, those who recognize disappointing results first doubt their experimental technique and then fear that rather than being thanked for speaking out, they will be accused of incompetence. As a result, scientists charge down blind alleys, in part because they are reluctant to change direction and in part because warnings are hushed.

Henry Bourne, a scientist at the University of California, San Francisco, tells Harris that much unreliability in biomedicine comes from an imbalance of “ambition and delight.” Delight is curiosity: the drive to know whether a technique really works or a result is really right. That drive can counter the myriad ways in which science can be wrong. But it is blunted by researchers’ need to show that they are productive, a requirement to get funding. And that means publishing in high-visibility journals, a powerful incentive to make big claims and show unambiguous results. With funding rates for U.S. biomedical researchers at historical lows, researchers don’t dare do the analysis or experiments that will certainly slow them down and might even cloud their conclusions.

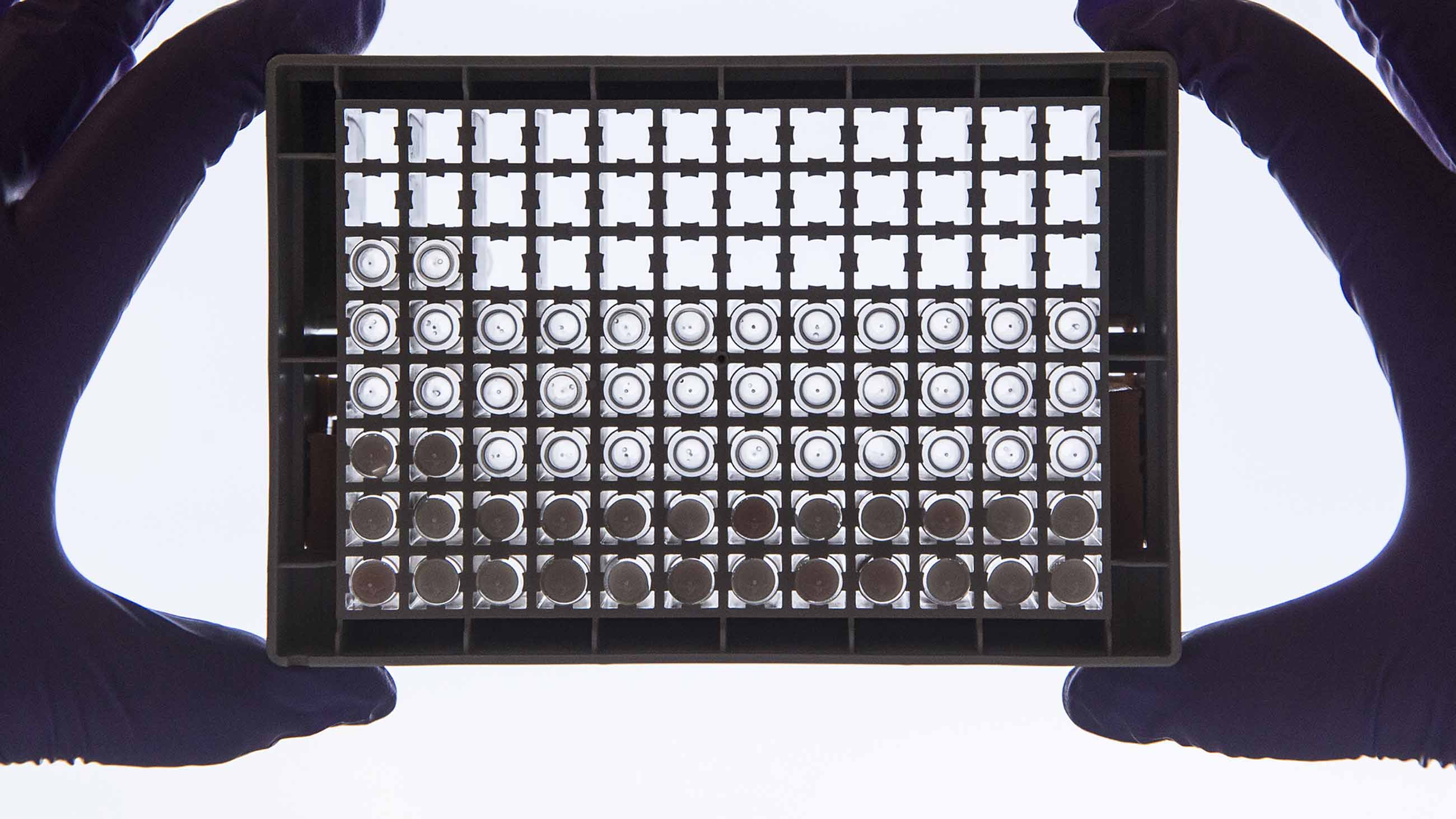

Harris profiles the bioinformatician Keith Baggerly’s quest to track down the reasons for spurious results in a blood-protein test that was supposed to diagnose ovarian cancer quickly and easily. Baggerly realized that the original work was not distinguishing patients based on whether they had cancer but on the day on which their samples were run through a highly sophisticated machine. “Batch effects,” such as running all the samples from cancer patients on one day and all samples from healthy patients on another, are now a problem most scientists try to avoid. But this is a Red Queen situation, in which scientists must run faster and faster just to stay in place. “In biology today, researchers are inevitably trying to tease out a faint message from the cacophony of data, so the tests themselves must be tuned to pick up tiny changes,” Harris writes. Modern methods have gotten really good at detecting false signals, and scientific journals are quicker to publish exciting but false conclusions than dissents. Baggerly’s findings ended up being published in The New York Times after his submission to a medical journal was rebuffed.

People like Brian Nosek, co-founder and executive director of the Center for Open Science, are trying to shift incentives so that being the first to publish flashy results matters less and publishing solid, transparent results matters more. He promotes conceptually simple solutions like encouraging researchers to share their full data and methods for others to inspect.

Even when studies are done rigorously, there’s no guarantee they can be used to predict that a drug will work. Mice and humans are different. However, once researchers start performing experiments on a particular animal or with a particular type of assay, they tend to keep using that technique and avoid asking whether it’s the right way to answer their questions. People who have based their career on such experiments don’t want to change, or to consider that their past work is bunk.

News accounts of biomedical discoveries often imply that cures are right around the corner, Harris writes, and “we live in a world with an awful lot of corners.” Many are built by how scientists’ efforts are rewarded: Too often it is more important for scientists publishing on a hot topic to be first than to be right. By singling out and profiling the individuals working to set the path and the scientific record straight, “Rigor Mortis” is a valuable corrective.

Monya Baker is an editor and journalist at Nature.