The prevalence of self-deception is truly staggering, and it has long been studied. In regard to personal health, for example, most people in one 1995 analysis believed they lived a healthier lifestyle and would live longer than their peers. In an even earlier study, around 90 percent of people in the U.S. believed they were better-than-average drivers. In social skills, 70 percent of high school students considered themselves above average in leadership, and 25 percent blatantly put themselves in the top 1 percent in their ability to get along with others, according to data collected by the College Board in 1976.

The accompanying article is excerpted and adapted from “The Liars of Nature and the Nature of Liars: Cheating and Deception in the Living World,” by Lixing Sun (Princeton University Press, 288 pages).

Likewise, people tend to exaggerate their popularity and inflate the number of their friends. In academic and job performance, 87 percent of Stanford MBA students rated themselves better than their average peers, and over 90 percent of faculty members placed themselves in the top half in teaching ability in a 1977 survey. The same can be said for lawyers who think they can win a case or for stock traders who consider themselves to be the best in the business.

Self-deception often compels us to attribute successes to our own effort, skills, or intelligence, while we excuse our failures as due to external causes or problems on the part of others. “Mistakes are made,” we may claim when things don’t go well for us, instead of stating the simple fact, “we’re wrong,” or “we’ve flunked.” Even when there is nobody to lay blame on, we may still look for a scapegoat: We split ourselves into past and present personalities, then claim that our past selves didn’t do so well, but our present selves are doing much better. We are new people now.

Clearly, many people are unable to recognize, much less admit, the ceiling of their own capabilities. Otherwise, how could most be above average — to a degree that the very term “average” has lost its statistical meaning?

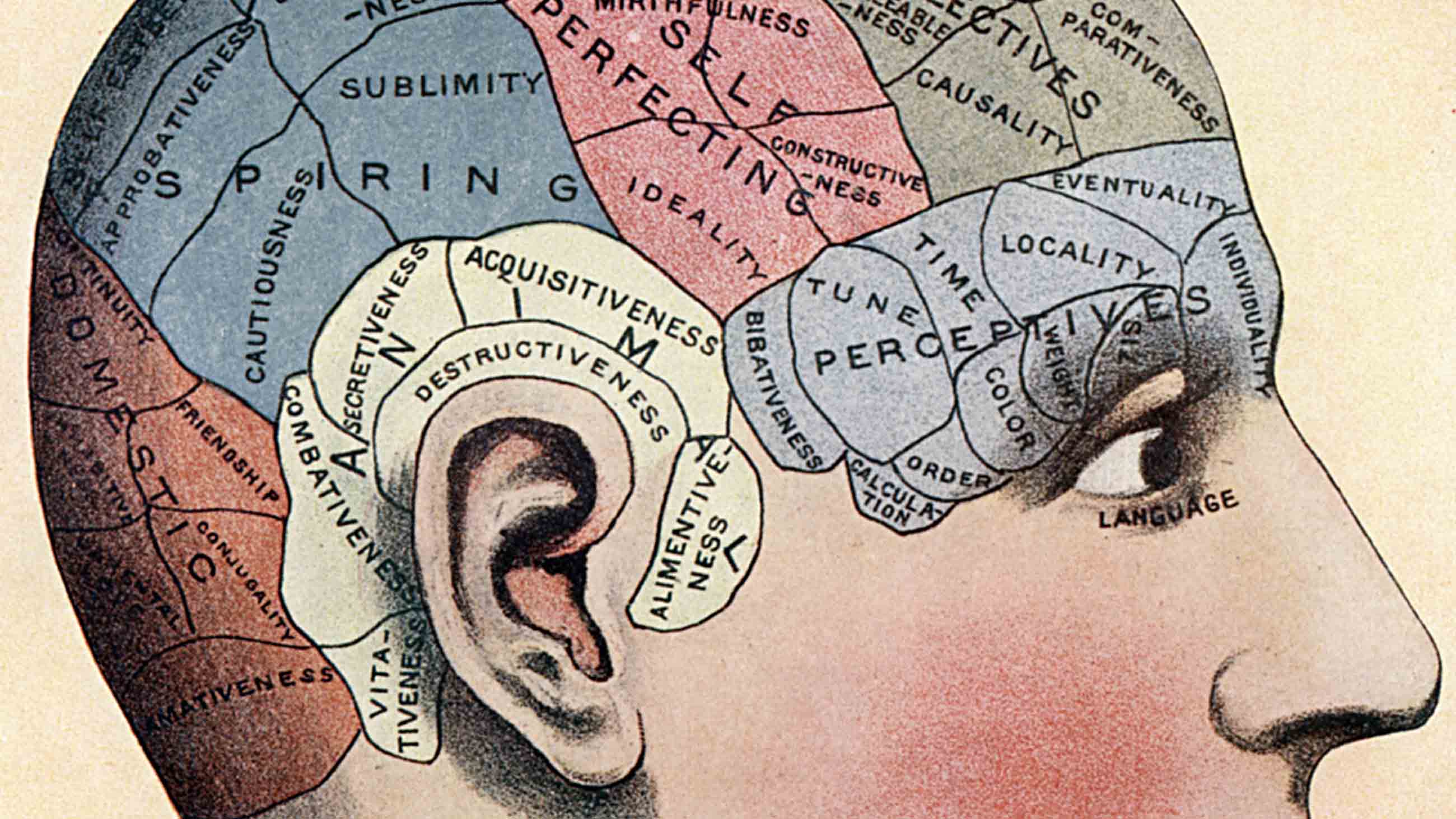

Psychologists have been engaged in a major effort to understand self-deception since the 1990s. One of the notable studies was done by Justin Kruger and David Dunning at Cornell University. The duo recruited 65 regular human “guinea pigs” — psychology undergraduates — and asked them to estimate their abilities in answering questions about humor, grammar, and logic before they knew their real scores.

As it turned out, participants who did poorly rated themselves far higher than their actual performance warranted. This cognitive distortion was worst for those in the bottom quarter, who overrated themselves by more than 45 percentile points, placing themselves near the 60th percentile.

Kruger and Dunning published the research in a 1999 paper titled “Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments.” This ignorance of one’s own ignorance came to be known as the Dunning-Kruger effect, or, more pedantically, meta-ignorance.

But if deceiving ourselves won’t do us good, why do we still do it?

We shouldn’t brush off self-deception too quickly. It appears that it evolved for a major benefit: self-healing. Praying to gods for a cure to a disease is practiced in virtually all cultures. Sometimes, “miracles” do happen through the intervention of a mythic “divine power” — or so some people believe. Perhaps more familiar to those of us who live in industrialized societies are placebos, widely known to reduce physical or mental symptoms.

Through psychological processes such as Pavlovian conditioning, social learning, memory, and motivation, placebos can affect our mental state. This may in turn activate the same genetic, immunological, and neural responses that are unleashed by real drugs with actual biological effects. That’s why inactive substances, words, rituals, signs, symbols, or treatments, if perceived as beneficial to our condition, can trigger a placebo effect.

Placebos are known for improving a range of conditions, including sleep, mood, a variety of diseases, and sex lives. Pain researchers have found that one of the primary pathways through which placebos work is kindling hope, which in turn can reduce anxiety. This activates the dopamine-mediated reward center in the brain, reducing a patient’s pain. When used with an effective drug (such as the painkiller remifentanil), placebos may further enhance the drug’s potency.

The placebo effect can be so strong that at times it may be stronger than the effect of the active ingredients in medicines. For example, the medicinal effect of antidepressants accounts for about 25 percent of improvement in patients with moderate symptoms, whereas the response to placebo (including spontaneous remission) accounts for up to 75 percent. Intriguingly, placebos may still work even when patients are informed that they are being treated with a placebo or a sham procedure.

Not all people respond to placebos, however; nor is it easy to predict who will. Nevertheless, a general pattern is still noticeable for those who do respond: The more expensive the placebo is in terms of the pill or the treatment, or the more invasive the treatment is, the more effective it becomes. The same can be said for the perceived sense of medical authority in therapy.

Associative learning plays a key role in the placebo effect. Besides the color, shape, and taste of pills, other factors such as the appearance of clinics, medical instruments, and health care paraphernalia, and the presence of, or interaction with, physicians and nurses, all have the potential to produce or enhance a placebo response. These settings can mentally suggest to patients that a cure is coming.

And the more patients have been exposed to these reminders of the medical profession, the stronger the placebo response will be. Patients, in concert with their immune systems, have been conditioned to respond in this way. This may explain why patients, even after being told that they are being treated with a placebo, may still respond positively.

The entire industry of alternative medicine and healing practices, such as herbal medicine, acupuncture, meditation, chiropractic, and aromatherapy, is, by and large, based on the placebo effect. Acupuncture, for example, is among the best known and most widely practiced alternative healing methods. Chinese practitioners of acupuncture have been plying their trade for three millennia. It’s difficult to dispute that it works. But why it works has remained a mystery.

Although upwards of 4,000 trials have been conducted since 2017 alone, acupuncture’s actual medical effect remains unclear. As such, it is best used for its placebo effect. Indeed, in rigorous randomized controlled trials for migraine and chronic pain that compared real acupuncture (needles placed on the “correct” meridian points) with fake acupuncture (needles intentionally placed on “incorrect” spots), researchers found no statistical difference between the real treatment and the fake control.

Some scientists adamantly discredit alternative medical treatments, equating them with snake oil. The hypothetical meridian system that underlies acupuncture has been labeled “prescientific gobbledygook.” Such views are biased.

After all, medicine is both a scientific endeavor and a healing practice. As a science, medical research must find out whether and why a drug or a treatment works by rigorously following scientific procedures in clinical trials. But as a practice to cure disease, heal wounds, and alleviate symptoms, the primary concern of medicine is whether a certain medicine or treatment works, not why it works.

Whether an alternative medicine or healing practice, such as a certain herbal remedy in traditional Chinese medicine, is useful can often elicit a spirited debate. The word “useful” can generate a great deal of confusion. In popular usage, it means something that works, as in improving conditions, reducing symptoms, or speeding up the healing process.

By that definition, placebos are useful, without any doubt. In science, however, “useful” means a positive effect in addition to that shown by the placebo effect. By that definition, a drug is considered useless unless it shows an effect statistically better than the placebo. One way to lessen the confusion might be to replace “useful” with “extra useful” or “more useful than the placebo” for an effective drug.

The real concern about the use of placebos lies in the issue of medical ethics. Are physicians and therapists allowed to deceive patients? If healing is the goal, then taking full advantage of the placebo effect becomes clinically relevant and ethically acceptable. Yet the same practices would clearly violate informed consent and constitute a breach of trust between physicians and patients.

Would you be satisfied if your doctor prescribed a drug for your back pain without telling you it’s just a sugar pill? What if the placebo doesn’t work for you, or worse, works against you in what is known as the nocebo effect? Even worse, by the time you discover the deception, you may have already missed the best window of time to be treated with a real cure. For all these reasons, whether and how placebos should be used will remain controversial. What we might all be able to agree on, however, is that placebos should be given a try when there is no other medical alternative.

Lixing Sun is a distinguished research professor in the Department of Biological Sciences at Central Washington University. He is the author of “The Fairness Instinct: The Robin Hood Mentality and Our Biological Nature” and the coauthor of “The Beaver: Natural History of a Wetlands Engineer.”

Archived comments are below.

“The Dunning-Kruger effect is (mostly) a statistical artefact” and is wonderfully ironic in the context of this article.

https://www.sciencedirect.com/science/article/abs/pii/S0160289620300271?via%3Dihub