Wikipedia doesn’t exactly enjoy a reputation as the most reliable source on the internet. But a report released in June by the Wikimedia Foundation, which operates Wikipedia, suggests that the digital encyclopedia’s misinformation woes may run even deeper than many of its English-speaking users realize.

In the report’s summary, the foundation acknowledged that a small network of volunteer administrators of the Croatian-language version of Wikipedia have been abusing their powers and distorting articles “in a way that matched the narratives of political organizations and groups that can broadly be defined as the Croatian radical right.” For almost a decade, the rogue administrators have been altering pages to whitewash crimes committed by Croatia’s Nazi-allied Ustasha regime during World War II and to promote a fascist worldview. For example, it was reported in 2018 that Auschwitz — which English Wikipedia unambiguously deems a concentration camp — was referred to on Croatian Wikipedia as a “collection camp,” a term that carries fewer negative connotations. The Jasenovac concentration camp, known as Croatia’s Auschwitz, was also referred to as a collection camp.

Croatian Wikipedia users have been calling attention to similar discrepancies since at least 2013, but the Wikimedia Foundation began taking action only last year. That the disinformation campaign went unchecked for so long speaks to a fundamental weakness of Wikipedia’s crowdsourced approach to quality control: It works only if the crowd is large, diverse, and independent enough to reliably weed out assertions that run counter to fact.

By and large, the English version of Wikipedia meets these criteria. As of August 2021, it has more than 120,000 editors who, thanks to the language’s status as a lingua franca, come from a diversity of geographic and cultural backgrounds. English Wikipedia is considered by many researchers to be almost, but still not quite, as accurate as traditional encyclopedias. But Wikipedia exists in more than 300 languages, half of which have fewer than ten active contributors. These non-English versions of Wikipedia can be especially vulnerable to being manipulated by ideologically motivated networks.

I saw this for myself earlier this year, when I investigated disinformation on the Japanese edition of Wikipedia. Although the Japanese edition is second in popularity only to the English Wikipedia, it receives fewer than one-sixth as many page views and is run by only a few dozen administrators. (The English-language site has nearly 1,100 administrators.) I discovered that on the Japanese Wikipedia, as on the Croatian version, politically motivated users were abusing their power and whitewashing war crimes committed by the Japanese military during World War II.

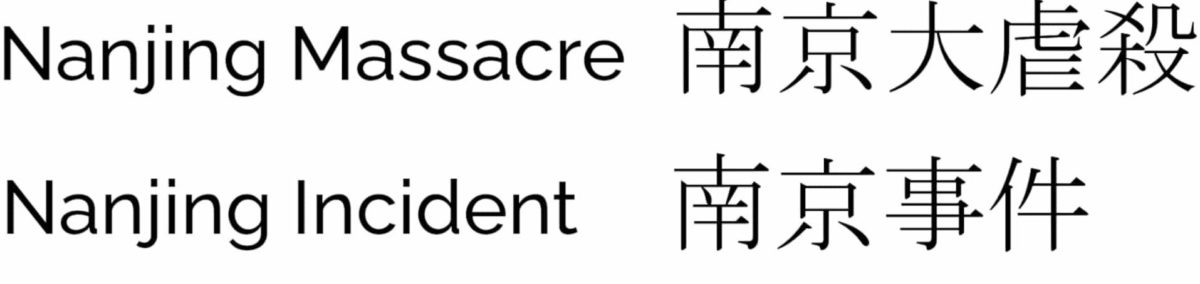

For instance, in 2010 the title of the page “The Nanjing Massacre” was changed to “The Nanjing Incident,” an edit that downplayed the atrocity. (Since then, the term “Nanjing Incident” has become mainstream in Japan.) When I spoke with Wikimedia Foundation representatives about this historical revisionism, they told me that while they’d had periodic contact with the Japanese Wikipedia community over the years, they weren’t aware of the problems I’d raised in an article I wrote for Slate. In one email, a representative wrote that with more than 300 different languages on Wikipedia, it can be difficult to discover these issues.

Two different terms for the World War II crimes committed in Nanjing, shown in English and Japanese.

The fact is, many non-English editions of Wikipedia, particularly those with small, homogeneous editing communities, need to be monitored to safeguard the quality and accuracy of their articles. But the Wikimedia Foundation has provided little support on that front, and not for lack of funds. The Daily Dot reported this year that the foundation’s total funds have risen to $300 million. Instead, the foundation noted in an email, because Wikipedia’s model is to uphold the editorial independence of each community, it “does not often get involved in issues related to the creation and maintenance of content on the site.” (While the foundation now says that its trust and safety team is working with a native Japanese speaker to evaluate the issues with Japanese Wikipedia, when I spoke to its representatives in March they told me that Japanese Wikipedia wasn’t a priority.)

If the Wikimedia Foundation can’t ensure the quality of all of its various language versions, perhaps it should make just one Wikipedia.

The idea came to me recently while I watched an interview with the foundation’s former CEO Katherine Maher, speaking about Wikipedia’s efforts to fight misinformation. During the interview, Maher seemed to imply that for any given topic, there is just one page that’s viewed by “absolutely everyone across the globe.” But that’s not correct. Different language versions of the same page can vary substantially from one language to the next.

But what if we could ensure that everyone across the globe saw the same page? What if we could create one universal Wikipedia — one shared, authoritative volume of pages that users from around the world could all read and edit in the language of their choice?

This would be a technological feat, but probably not an impossible one. Machine translation has been quickly improving in recent years and has become a part of everyday life in many non-English-speaking countries. Some Japanese users, rather than read the Japanese version of Wikipedia, choose to translate the English Wikipedia using Google Translate because they know the English version will be more comprehensive and less biased. As translation technology continues to improve, it’s possible to imagine people from all over the world will want to do the same.

Maher has previously stated that “higher trafficked articles tend to be the highest quality” — that the more relevant a piece of information is to the largest number of people, the higher the quality of its Wikipedia entry. It’s reasonable to expect, then, that if Wikipedia were to merge its various language editions into one global encyclopedia, each entry would see increased traffic and, as a result, improved quality.

In a prepared emailed statement, the Wikimedia Foundation said that a global Wikipedia would change Wikipedia’s current model significantly and pose many challenges. It also said, “with this option, it is also likely that language communities that are bigger and more established will dominate the narrative.”

But the Wikimedia Foundation has already launched one project aimed at consolidating information from around the globe: Wikidata, a collaborative, multilingual, and machine-readable database. The various language-editions of Wikipedia all pull information from the same version of Wikidata, creating consistency of content across the platform. But Mark Graham, a professor at the Oxford Internet Institute, has expressed concerns about the project. He cautioned that a universal database might disregard the opinions of marginalized groups, and he predicted that “worldviews of the dominant cultures in the Wikipedia community will win out.”

It’s a valid concern. But in many ways, the current Wikipedia already feels colonial. The site, operated by a foundation whose trustees are mostly American and European, has dominated the global internet ecosystem ever since Google began putting it at the top of search engine results.

Although Wikipedia may have managed, belatedly, to remove abusive editors from its Japanese and Croatian sites, the same thing could happen again; the problem is built into the system.

Creating a global Wikipedia would be challenging, but it would bring further transparency, accuracy, and accountability to a resource that has become one of the world’s go-to repositories of information. If the Wikimedia Foundation is to achieve its stated mission to “help everyone share in the sum of all knowledge,” it might first need to create the sum of all Wikipedias.

Yumiko Sato (@YumikoSatoMTBC) is an author, music therapist, and UX designer.

Comments are automatically closed one year after article publication. Archived comments are below.

Contrary to the author’s belief, increased traffic (if everyone was forced to read just machine translations of English Wikipedia) would NOT bring improved quality (because people dependent on machine translation would not be able to edit the English original). And don’t imagine something as wild as “let them each edit it machine-translated into their language and back” would ever work. Your proposal basically says that only English-speaking people should be allowed to edit and everyone else could only read.

Total bullshit. This way the English Wikipedia would become the absolute truth, a kind of Vatican that decides for all of us? An ethnocentric, Anglocentric thing, in which what doesn’t exist in English just doesn’t exist. Could be OK for articles about, let us say, life on Mars or the expansion coefficient of iron. But about history? Languages? Art? For many people, what doesn’t exist (song, theatre, literature…) in their own language just doesn’t exist, or is folklore, or what the Anglo-Saxons for example call “world music,” i.e. everything that is “un-English” but seems to exist somewhere in the ethnic jungle outside the Anglo-Saxon world. For anyone who makes the effort to learn and read more than one language, not really an American specialty, it is instructive to read different language versions and make up their own mind. I would not be surprised if Native Americans wrote differently about the “discovery” of America than the Spanish would.

I agree with Rolo. And it opens up more questions: exactly which Wikipedia would be the “pivot article” for the machine translation to translate? English? If the English Wikipedia is the main Wikipedia, then be ready to see a ton of hardworking editors leave the community, as they cannot do anything if they don’t understand English. Your model would instantly bring destruction to the community. No natives to give their opinion on non-Anglophone articles, no more local community development, etc.

Machine translation is very bad for languages that belong to the same family. For example, a lot of machine translation into Malay ends up using Indonesian vocabulary or grammar that is not valid or not comprehensible in Malay. Using Indonesian grammar and vocabulary for Malay is like using German grammar and vocabulary for English. Until machine translation can properly translate with the correct grammar and vocabulary, it is a very bad idea for readers to depend on it.

A clear example is when machine translation uses the particle “adalah” with nouns in Malay when the correct particle is “ialah”, because “adalah” is NOT valid to use with NOUNS in Malay even though it might be valid in Indonesian. There are also discrepancies in the usage of the particles “di” vs “ke”, “dari” vs “daripada”, and “ke” vs “kepada”.

I’m afraid the translation option really isn’t feasible. Machine translation just isn’t that good, especially for smaller languages. It also leads to centralized control from English speakers.