In the Race to Crack Covid-19, Scientists Bypass Peer Review

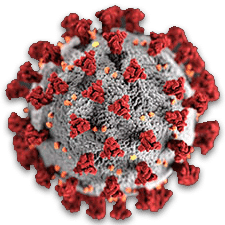

As the SARS-CoV-2 virus spreads, causing more than 800,000 confirmed cases of Covid-19 globally, researchers are scrambling to learn more about the virus. Their collective efforts have generated more than 1,900 papers, as doctors and scientists try to rapidly share new findings with each other and with the public.

At stake are urgent unanswered questions about Covid-19: Can a recovered patient get the disease again? What techniques will provide faster and more accurate tests? How effective is social distancing at slowing the spread of the disease?

That flood of research has put new strain on a scientific process accustomed to vetting and publishing new results much more slowly. In traditional publishing, papers are read by at least two experts in the field — a process called peer review — which, at least in theory, helps catch mistakes and unsound science. The back-and-forth of review and revision can take months.

Traditional publishing has not always kept pace with the need to get information out quickly during an epidemic. In the 2003 SARS epidemic, for example, 93 percent of the papers published about the spread of the outbreak in Hong Kong and Toronto didn’t actually come out until after the epidemic period was already over, according to a 2010 analysis by researchers at the French National Institute of Health and Medical Research.

Michael Johansson, a biologist at the Centers for Disease Control and Prevention and an epidemiology lecturer at the Harvard T.H. Chan School of Public Health, wrote in an email to Undark that in the context of an outbreak, “traditional review methods are too slow.”

In response, some traditional journals have accelerated their peer review process for coronavirus papers. But the Covid-19 crisis has also become a major test for preprints — an alternative system of publishing that had already been gaining traction among some scientists who see them as a way to make their results available as soon as possible.

With preprints, scientists submit a draft of their results to a server, which posts it online within a few days. (The paper may eventually end up in a peer-reviewed journal, too.) The preprints are freely available for anyone to read, as are any mistakes, logical gaps, or omissions that might normally be corrected during peer review.

Because preprints aren’t peer reviewed, it can be difficult for non-experts — including journalists, policymakers, and scientists in other fields — to determine what is important and based on solid science, and what is not. While there’s widespread agreement that preprints can be useful, they also run the risk of contributing to the spread of faulty information.

As the Covid-19 crisis brings a flood of preprints, some scientists are now working to improve the ways that preprints are accessed, analyzed, and reported. In that effort, researchers are trying to balance the benefits of rapid access to essential new information with the threat of preprint-based mistakes that could cause panic or be otherwise harmful.

The first preprint server launched in 1991, focusing on physics papers. But preprints took longer to catch on in other fields. “If there’s a fraud or mistake in physics, there’s no direct health consequences,” says Vincent Larivière, who studies scholarly communication at the University of Montreal. “The stakes weren’t the same as it was in health science, which explains why it took more than 20 years for medical scientists to actually embrace [preprints].” In 2013, scientists at Cold Spring Harbor Laboratory in New York launched bioRxiv (pronounced “bio-archive”). MedRxiv, a sister server focusing on health sciences research, launched in June 2019.

The system has inspired criticism. In a 2018 editorial in the journal Nature, science communicator Tom Sheldon argued that preprints could undermine public understanding of science.

A month later, Sarvenaz Sarabipour, a postdoctoral fellow at Johns Hopkins University, and eight other scientists published a rebuttal arguing that given responsible reporting practices, “preprints pose no greater risk to the public’s understanding of science than do peer-reviewed articles.” Indeed, there are many cases of bad or uncertain science making it through peer review — including during the present pandemic. And the peer review process has itself been criticized for being non-transparent and for enabling reviews that are unprofessional and even cruel.

Research organizations also argued that preprints could be useful in a public health emergency, despite the risk of misinformation. During the 2016 Zika outbreak, more than 50 organizations committed to the Wellcome Trust’s initiative for data sharing in public health emergencies, which explicitly encouraged the submission of preprints. Since then, more than one hundred organizations, including several major traditional publishers, have committed to the 2020 version of the initiative.

Since the beginning of the Covid-19 outbreak, preprint submissions have grown dramatically. John Inglis, co-founder of bioRxiv and medRxiv, wrote in an email to Undark that together these two servers have already posted 1,000 preprints related to the Covid-19 outbreak.

“The more you know about the bug, and the quicker you know it, the better chance you have of developing something to counteract it,” said Richard Sever, another bioRxiv/medRxiv co-founder. “In an epidemic scenario, every day counts.”

The combination of accessibility and lack of peer review occasionally means that faulty science gets through on bioRxiv. A preprint posted on bioRxiv in late January, which claimed that the similarities between the SARS-CoV-2 coronavirus and HIV-1 were “unlikely to be fortuitous in nature,” was mentioned by more than 17,800 users on Twitter, according to the research analytics tool Altmetric. Influential conspiracy theorist sites, including Zero Hedge and InfoWars, used the preprint to bolster the conspiracy theory that the virus was a man-made bioweapon.

Scientists took action almost immediately, pointing out on Twitter, and in comments on bioRxiv itself, that, while there are some small genetic similarities between SARS-CoV-2 and HIV-1, these similarities are shared by many other viruses as well, and any overlap is almost certainly a coincidence. (Many things — for example, mice and humans — share some genetic material; that does not mean a scientist spliced some mouse DNA into humans.)

“In one respect, what seemed like a failure of preprints was actually a success, because within 24 hours, the error had been spotted by the community,” said Sever, “and then within about 48 hours [the authors] made a formal withdrawal.” The paper is still on the bioRxiv website, under a bright red banner explaining that it has been withdrawn.

For comparison, Sever noted that it took The Lancet — a prestigious medical journal — 12 years to retract the now-infamous study that suggested vaccines caused autism.

Sever said that even in cases where the preprint isn’t egregiously misleading, the community is still pretty good at picking up on smaller mistakes, like an incomplete methods section or a dataset that wasn’t posted. And scientists are usually good at self-regulating as well. “Nobody wants to get the reputation of being the individual who puts up some half-off paper and doesn’t put up all the data,” said Sever.

MedRxiv has a more stringent screening process. Claire Rawlinson and Theodora Bloom, co-founders of medRxiv, told Undark that their team has been very careful about which coronavirus-related preprints they post. “Our aim is first to do no harm,” Bloom said. All of the preprints are screened by subject matter experts, who are responsible for determining whether a preprint could cause harm if its results later turned out to be wrong, Rawlinson said. Preprints are also vetted by an experienced medical editor.

For preprints that claim a new cure for a disease, or that might cause people to change the medications they take or avoid established tools like vaccination, the medRxiv team will generally conclude that these high-stakes papers should be peer reviewed before they are released.

Aside from screening processes, there are many other ways that organizations and individuals are trying to prevent preprint-related misinformation. After the incident with the Covid-19-and-HIV preprint, bioRxiv prominently posted a banner above each paper explaining that preprints, including those related to the coronavirus, “should not be regarded as conclusive, guide clinical practice/health-related behavior, or be reported in news media as established information.” It’s unclear whether this disclaimer will be enough to prevent some journalists and social media users from presenting preprints as finished research.

The crisis has also given rise to informal networks of review that help analyze and curate preprints after posting. Twitter, in particular, has emerged as an important platform for commentary, regarding both preprints and published papers. Trevor Bedford, an epidemiologist at the University of Washington, posted a comprehensive debunking of the Covid-19-and-HIV preprint for his 190,000 followers. Paul Knoepfler, a stem cell researcher at the University of California, Davis, has used Twitter to express concerns about preprints that suggest, with little evidence, that stem cells could treat Covid-19.


Scientists also use Twitter to highlight particularly useful preprints. Muge Cevik, a medical virologist at the University of St. Andrews in Scotland, has nearly 75,000 followers (picking up thousands in recent weeks) and posts regular updates of what she considers to be the most informative papers and preprints related to Covid-19.

There have also been efforts to create a more formal system for curating preprints under crisis conditions. One of the newest, Outbreak Science Rapid PREreview, allows people to request, read, and review outbreak-related preprints. So far, however, the platform has gained limited traction: reviewers have contributed only about 25 reviews. Daniela Saderi, a neuroscientist and one of the platform’s cofounders, said that while publicizing a new platform like this one is difficult, progress is being made: JMIR Publications, an open-access digital health research publisher, announced its partnership with Outbreak Science Rapid PREreview on March 27.

It’s not yet clear whether these measures will be enough to prevent the dissemination of preprint-related mistakes, and the flood of preprints has presented unique challenges for journalists who are trying to report the facts of the outbreak.

Each day, there’s a deluge of papers, preprints, articles, and government announcements, and many of the people reporting on them don’t necessarily have a background in science. Rick Weiss, the director of SciLine, a free service that connects reporters with experts, says that as newsrooms have shrunk in recent years, there’s often no longer a budget for specialty reporters, including science and health reporters. This is especially problematic now, when the coronavirus plays a role in so many news stories.

“All over the country, there’s everyday local reporters trying to inform their readers and listeners and viewers about what the facts are,” Weiss said. “Many of them do not know who to turn to.” Reaching people at the CDC or the National Institutes of Health, he added, can be difficult right now.

Weiss said that in the 2.5 years since SciLine was founded, the organization has filled more than 1,000 reporter requests for scientists, and that demand has more than doubled during the outbreak.

“The prime risk of the media publicizing preprints is that additional work will prove those initial results wrong, and we all know how difficult it is to ‘undo’ the promulgation of wrong information,” Weiss wrote in a follow-up email. “There is no ‘control Z’ in journalism.”

But for many researchers, the risk of error is worth it. “We often have to act with imperfect information,” wrote Naomi Oreskes, a professor of the history of science at Harvard who has written extensively about peer review and public trust in science, in an email to Undark. “Covid-19 is an extreme case, but any case where people’s health and safety are at stake requires us to do the best with what information we have.”

Hannah Thomasy is a freelance science writer splitting time between Toronto and Seattle. Her work has appeared in Hakai Magazine, OneZero, and NPR.
