Welcome to the reboot of The Undark Podcast, which will deliver — once a month from September to May — a feature-length exploration of a single topic at the intersection of science and society. In this episode, join multimedia producer Eliene Augenbraun and podcast host Lydia Chain as they untangle the complexities of precision medicine — and the technological, ethical, and logistical hurdles stakeholders must clear to bring it to fruition.

Below is the full transcript of the podcast, lightly edited for clarity. You can also subscribe to The Undark Podcast at Apple Podcasts, TuneIn, or Spotify.

Eliene Augenbraun: Michele Disco was not having a good year and it was about to get worse. She was getting a divorce and had a young child at home. Then her doctor found a lump in her breast. After it was biopsied …

Michele Disco: I remember the doctor telling me that what they found was malignant — so not using the word “cancer” — so I’m thinking malignant, malignant, that’s bad. Malignant is bad. What do you mean by malignant? And that’s when they said “cancer.”

Eliene Augenbraun: Disco was really concerned, but her doctor reassured her that there was a treatment designed just for her, featuring a cocktail of drugs targeted at her specific type of tumor. The treatment worked.

Michele Disco: It felt really great to know that there was something that was targeted for what I had that was potentially life-saving.

Eliene Augenbraun: But Disco’s experience of getting drugs targeted to her exact cancer is not what most patients experience. Here’s New York University bioethicist Arthur Caplan.

Arthur Caplan: There are diseases — breast cancer being in the lead — where markers have been identified and risk predictions can be offered and sometimes medicines used or not used, but, you know, generally speaking we’re still not in the era of precision medicine yet — no matter how many people say so.

Lydia Chain: This is the Undark Podcast. I’m Lydia Chain. Precision medicine promises treatment designed around you as a unique individual — the right treatment for every disease you might ever have, taking into account everything that makes you you: your age, genetic profile, specific disease, co-existing conditions and more. But to make the leap from the system we have now to true precision medicine, we’d need more data, a lot more data. As researchers and drug companies try to build databases that hold the medical, prescription, and lifestyle data of millions, even billions of people, questions about privacy, bias, and ethics challenge that promise of precision medicine. Eliene Augenbraun has the story.

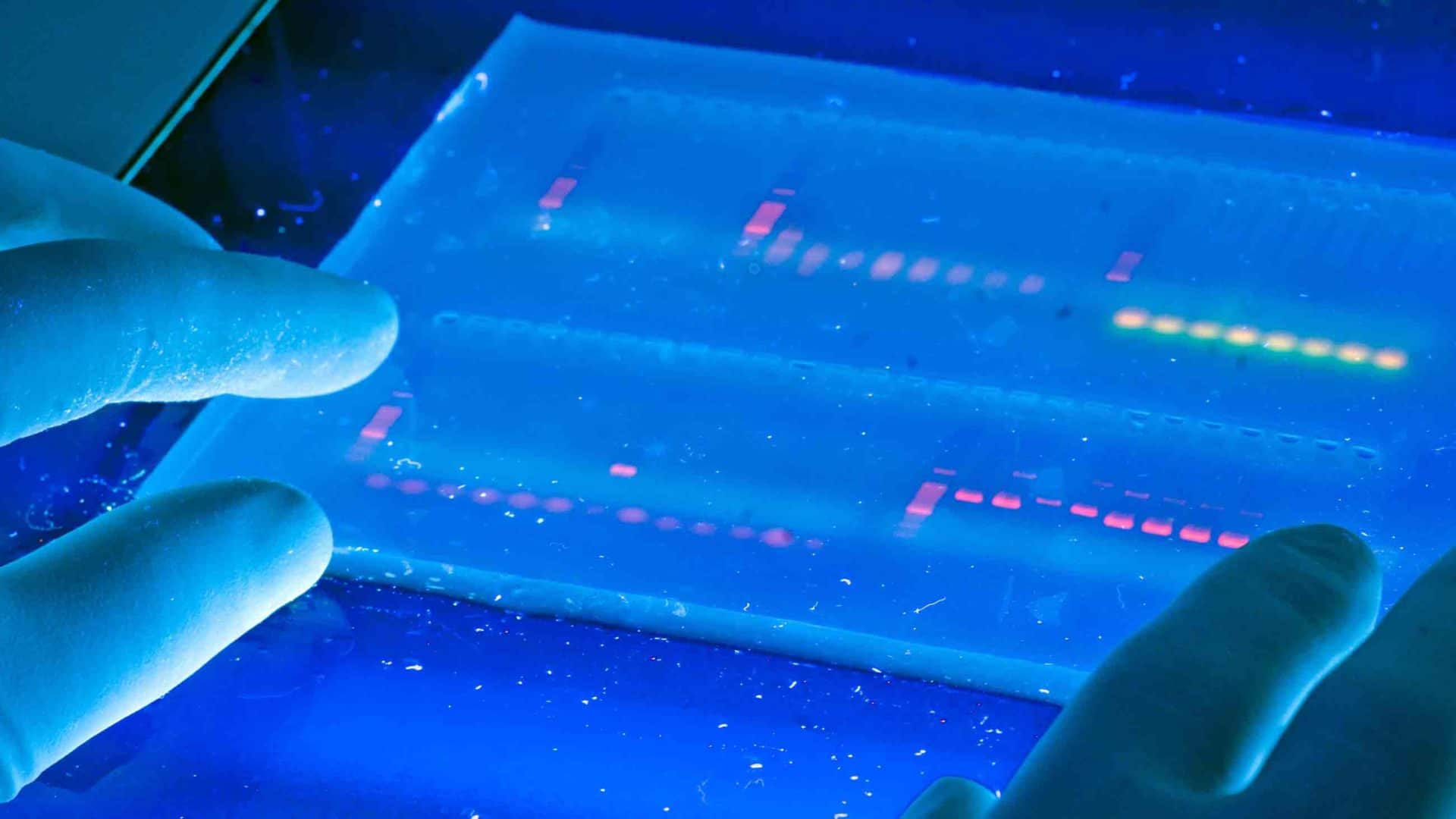

Eliene Augenbraun: The pharmaceutical industry is investing heavily in precision medicine. Billions of dollars are being spent to identify “biomarkers”: proteins, genes, or measurements that distinguish one disease from another. A biomarker may be a gene associated with a particular disease or a protein shed into the blood as a disease progresses. Even more billions are being spent to develop drugs based on clues hidden in those biomarkers. Nancy Dreyer is an epidemiologist and chief scientific officer at IQVIA, a contract research organization that develops and tests drugs in clinical trials.

Nancy Dreyer: Precision medicine has to do with precision therapeutics targeted to a particular condition, organ, location, situation. Because it’s not just the tumor target — so do I have HER2-positive breast cancer — but do I also have diabetes and hypertension?

Eliene Augenbraun: Designing a drug that hits a target molecule in a Petri dish is the easy part. But how do we find biologically meaningful biomarkers and drug targets that actually cure the disease? How do we tailor treatments to particular kinds of people? Discover weird side effects? We could test every new treatment, every new version of a treatment, in a traditional clinical trial. But the costs would be very high. Here’s Sharyl Nass, Director of the National Cancer Policy Forum at the National Academy of Sciences.

Sharyl Nass: You know if you have to do that for every agent that you’re testing and every different type of cancer, it just becomes impossible.

Eliene Augenbraun: Instead, the health care industry wants to collect information from very large populations — medical records, pharmacy orders, and any other health-related data they can get. Public health experts can then use sophisticated math tricks to tease out patterns. We’ll get to that later. But first, let’s talk about how drugs have made it to market since the end of World War II.

In the U.S., the Food and Drug Administration — the FDA — reviews and approves new drug applications based on animal and lab studies followed by clinical trials. Potential drugs are first developed in the lab — in test tubes and Petri dishes, then tried out in animal models. In 1946, the first “randomized, double-blind” clinical trial was conducted in the U.K. for the antibiotic streptomycin. “Randomized, double-blind” means that patients are assigned to each treatment randomly, and doctors and patients don’t know who is getting which treatment. Since then, drugs have been tested in humans in phases — Phase I, II, and III clinical trials. Phase I proves a drug is safe in people. Phase II shows whether the treatment actually works. Phase III determines whether the new treatment works better or is safer than older treatments. A Phase III randomized, double-blind study requires hundreds or thousands of people. Some trials take a long time to start up.

Sharyl Nass: It takes as long as two years sometimes to launch a new study.

Eliene Augenbraun: Dreyer worries that Phase III clinical trials are so expensive we can’t conduct very many.

Nancy Dreyer: For example, you make a new drug for diabetes in the United States today, you must do a study of cardiovascular safety. That’s required. If you did that in a typical fashion, that trial tends to cost — and I hope you’re sitting down — but the costs for those often exceed half a billion dollars. So that’s billion with a B.

Eliene Augenbraun: And here is the surprising thing. Most drugs turn out to fail at some point during clinical trials. Only 3 percent of cancer drugs tested in humans make it to market, though treatments designed to target a very specific biomarker are far more successful — all the way up to 8 percent.

Nicola Toschi: The point is that precision medicine is only just barely born, right? So when it’s actually there, the way it’s thought of and the way, you know, people are trying to make it happen, it will be accurate. If it’s not accurate, then it’s not precision medicine.

Eliene Augenbraun: That’s Nicola Toschi, a mathematician and physicist at the University of Rome and Harvard Medical School. Even with that low level of success, doctors can still choose from multiple treatments. They just don’t have the data to guide them about what to do in each circumstance.

Sharyl Nass: There are a thousand of them in development right now for cancer. If we put too many into the clinical space and we don’t really know how to use them, then physicians will be faced with a very difficult situation with the patient right in front of them. There may be six different options to treat that cancer, but they don’t know which one is actually best for that patient.

Eliene Augenbraun: That is the challenge for cancer, for which we already know a large number of biomarkers — like HER2 for Michele Disco’s breast cancer. But we don’t have highly specific biomarkers for brain diseases because they may require invasive tests like spinal taps. Worse yet, for many diseases, there are no good biomarkers to predict whether a patient will deteriorate quickly, slowly, or not at all. Researchers need to find …

Nicola Toschi: Subtle clues that differentiate one patient from another, uh, that may actually predict, you know, why two patients that look identical to a traditional clinical workup will take two opposite trajectories and why somebody may die earlier or slower, and so on and so forth.

Eliene Augenbraun: To bring down the cost of clinical trials and find more subtle biomarkers …

Nicola Toschi: You will need maths. It’s able to detect patterns.

Eliene Augenbraun: Mathematicians look for patterns or signals, which they call “features.” Computers can be trained to search for features that are too subtle and complex for humans to see. One type uses …

Nicola Toschi: Artificial intelligence techniques, which are called, broadly, feature reduction or feature selection techniques, which will attempt to sort of filter out the most informative features. A feature is, in this case, a biomarker.

Eliene Augenbraun: Feature reduction works something like this. Say this jumbled music represents patient data — blood pressure, liver proteins, kidney function, cognitive scores — as notes encoded for pitch, frequency, duration, and so forth. An untrained AI computer easily finds a pattern. The scientist checks this pattern — or feature — against the data. Say he finds out that it indicates something irrelevant to the course of the disease, like in which center a patient got an MRI scan.

Nicola Toschi: Maybe one center has a different machine, or maybe at another center the scanner is on a different floor. Where the difference is only in the, you know, the voltage of the current that was powering the scanner …

Eliene Augenbraun: Which has no bearing on their prognosis or treatment.

Nicola Toschi: What I would do in this case is I would first train the algorithm to actually detect the center in which the patient was scanned. And then I would take whatever features that algorithm has determined are most distinctive of the center and I would eliminate them from the next round of search. Once you learn them, you can exclude them from the model.

Eliene Augenbraun: In the next round of search, by excluding the known pattern, other patterns are easier to find. Mathematically speaking, scientists want to reduce all the many possible features down to a very few. From those few features, scientists can determine how a biomarker relates to a disease. If the biomarker is medically relevant to the disease — like the HER2 receptor for breast cancer — then it could be a good drug target. But — and this is a big but — the quality of that model depends on the quality of the data.
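
The two-round search Toschi describes can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the feature names, the six toy patients, and the simple "standardized gap between group means" score are all invented here, and the score stands in for whatever learning algorithm a real study would use.

```python
from statistics import mean, pstdev

def separation(values, labels):
    """Standardized gap between the two groups' means for one feature."""
    group_a = [v for v, l in zip(values, labels) if l == 0]
    group_b = [v for v, l in zip(values, labels) if l == 1]
    spread = pstdev(values) or 1.0  # guard against constant features
    return abs(mean(group_a) - mean(group_b)) / spread

def rank_features(table, labels):
    """Feature names sorted from most to least separating."""
    return sorted(table, key=lambda name: separation(table[name], labels), reverse=True)

# Toy data, invented for illustration: six patients, three features.
features = {
    "scanner_voltage": [1.0, 1.1, 0.9, 5.0, 5.1, 4.9],
    "protein_level": [2.0, 8.0, 2.1, 7.9, 2.2, 8.1],
    "noise": [3.0, 3.1, 3.2, 3.0, 3.1, 3.2],
}
center = [0, 0, 0, 1, 1, 1]   # which site scanned each patient
outcome = [0, 1, 0, 1, 0, 1]  # e.g. slow vs. fast progression

# Round 1: learn which features give away the scanning center.
center_ranking = rank_features(features, center)
excluded = set(center_ranking[:1])  # drop the single most center-specific feature

# Round 2: search only the remaining features for outcome signal.
remaining = {k: v for k, v in features.items() if k not in excluded}
outcome_ranking = rank_features(remaining, outcome)
```

In this toy table the scanner-related feature is the one that best identifies the center, so it is removed before the second search, which then ranks the hypothetical protein measurement as the most informative feature for the outcome.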

Nicola Toschi: It needs to be the most informative possible. Otherwise, it’s, you know, what people call “rubbish in, rubbish out,” you know, whatever you put in the middle, even if it’s the best deep learning algorithm in the world. The amount of effort in the algorithmic side should definitely be paralleled by effort, probably even larger effort, in data collection, data curation.

Eliene Augenbraun: So where do scientists look for good, well-curated data? Traditionally, data from clinical trials have been considered the gold standard. But with new AI techniques, there is an opportunity to learn from treatments in the real world with a lot of people over a long period of time. Nass says …

Sharyl Nass: The bigger the dataset, the more answers you can get.

Eliene Augenbraun: And the way to get a lot of data, say for cancer treatment, is to use …

Sharyl Nass: Real-world clinical evidence. So these are patients that are just being treated for cancer. They’re not in a clinical trial, but we’re collecting all of their data in a clinical setting …

Eliene Augenbraun: All their diagnoses, lab results, and treatments from their medical charts …

Sharyl Nass: And you can sift through all that data to try and find signals there, as well. And I think that could be a really powerful new tool that’s available for drug development or drug assessment.

Eliene Augenbraun: Phase III clinical trials require lots of patients who do not get the new treatment. Instead of recruiting them for the trial, data scientists want to cull through very large databases to find patients just like the patients enrolled in the clinical trial and compare their outcomes to patients getting the new treatment. That would reduce the number of people needed for the trial and thus reduce its cost.

Nancy Dreyer: Now there’s some new work going on that’s showing that you can actually randomize a population and if you have enough of them that can be followed through electronic medical records, you can bring that cost from $500 million to under $50 million.

Eliene Augenbraun: Even more intriguing is what happens after a new treatment becomes widely available. Looking at real-world clinical evidence could help refine that treatment, identify rare side effects, and provide clues to even better drugs. Here’s Caplan again …

Arthur Caplan: We study drugs to make sure they’re safe and effective. The FDA demands data and then approves them but we don’t track things once they get out into the real world, once we get out into real populations. We have to improve that. If you’re going to be precise, it’s not enough to say that a drug works on a relatively healthy population of people who are 20 to 50 years old. You want to know what it’s going to do to 78-year-old people with three diseases who are, you know, Chinese Americans. Did it work there?

Eliene Augenbraun: But not all clinical settings collect the same data or in the same format, making it hard to merge many datasets and analyze them as one dataset. The problem is called “interoperability.” Nass explains.

Sharyl Nass: Well, the government has been putting out guidance and regulations that are supposed to help us get to interoperability of electronic health records. I think progress has been slower than anticipated, but there are actually ongoing efforts to try and standardize some of the data collection in clinical care.

Eliene Augenbraun: In the U.S. and many other countries, every disease and treatment is assigned a code in electronic medical records. These codes can be used by doctors and insurance companies to track how individual patients are doing.

In one grassroots effort, called Minimal Common Oncology Data Elements or mCODE, international professional societies are weighing in on what codes would be most useful to oncologists to figure out which treatments work for which people. Data from medical records can be extracted, removing the name and personal identifiers, and sent to central databanks. All people who shared a code for a particular disease — say prostate cancer — would be lumped together into one database.

Sharyl Nass: It’s primarily professional societies who are taking the lead on this because they recognize that, to advance their field, they really need to tackle this. And so if they can agree among themselves, you know, among the clinical experts what, what they think are the key data elements for taking care of cancer patients, and they can specify exactly how we should refer to those and how they should be collected in the electronic health record, I think it would do a lot to advance where we are.

Eliene Augenbraun: For example, not all doctors name or track a disease or treatment the same way. mCODE would change that for oncologists on a global scale. In the U.S., another system — “the Sentinel System” — was launched by the FDA in 2016. In late 2019, dozens of health care partners — including universities, hospitals, insurance companies, biotech companies, CROs, and others — set up three large data centers to collect health records from multiple sources. Dreyer’s company, IQVIA, is a co-lead for one center focusing on community building and outreach.

Nancy Dreyer: We cover a lot of people, hundred million people under the Sentinel System so we can study drug safety and effectiveness.

Eliene Augenbraun: That’s almost a third of the United States. But she’d like even more people to be included in her databases.

Nancy Dreyer: One of the things I’m passionate about is working right now with regulators around the world as we start to adapt regulatory guidance for use of new medicines and approval of medicines to use more of this real-world evidence.

Eliene Augenbraun: So, she dreams of expanding the number of standardized diagnostic and treatment codes to all health care systems, globally. Caplan points to the positive ethical dimensions of including people of all races, nationalities, and income levels in one database.

Arthur Caplan: If you’re really going to treat everybody equitably, you’ve got to get enough samples so that you can figure out what biological differences make a difference in what subgroups. But the only way to do it is to have a worldwide effort merging international databases.

Eliene Augenbraun: But privacy is a concern when vacuuming up large amounts of patient data.

Nicola Toschi: And obviously there’s a large barrier and rightly so in collecting data from patients. Right?

Eliene Augenbraun: Medical records are digital; our lives reduced to datasets. And we are not always fully informed about how our data will be used. Up until now, patients were supposed to be informed of and consent to all aspects of care — “informed consent.”

Arthur Caplan: Well, I think informed consent is a good standard to have but I’m not sure it’s working very well with the era of big data in medicine. Big data has been collected without informed consent by all kinds of companies and organizations, and we know that, whether it’s Twitter or Uber or Facebook collecting our data. What’s happening now is some of those companies are starting to make alliances with hospitals that have big datasets where we go or with companies like insurance companies or CVS or other drug stores which have been collecting information on us forever, you know.

Eliene Augenbraun: Not all of these companies have our best interests in mind. They may want to sell us things we don’t want. They may not care if they reveal family secrets. There may be law enforcement issues from stalkers to scammers. They may accidentally release private information to people, employers or others, that we wish did not know us so well.

Arthur Caplan: There’s also genetic information as big data. And the reason that’s different is informed consent, in the old days, presumed you were going to control information about you. But genetic information, those tell you things about other people. They can tell you things about your biological relatives. They can tell you things about non-paternity. That could be good, but it could be bad.

Eliene Augenbraun: Interoperability — being sure that all databases use the same codes and format so they can be merged — is no longer Dreyer’s biggest problem. But the workaround might erode privacy even further.

Nancy Dreyer: On the one hand, as a population scientist, that would be such a gift if everyone everywhere recorded diseases and drug treatments using the same coding standards, but we’ve grown more sophisticated than we used to be, where interoperability matters. Data linkage matters, but I can transform your data any way you keep it into something usable. I can take your data in Sentinel format or in another standardized format and I can make sense out of them because that’s what our data science allows us to do these days.

Eliene Augenbraun: So data scientists can combine one dataset with another, in many cases using artificial intelligence to mix similar types of data into one merged database. This is useful to merge records from different doctors’ offices. What about combining doctor office records with pharmacist and insurance records?

Nancy Dreyer: For example, in the company that I work in — IQVIA — we use a standard algorithm everywhere that takes things like my name, my date of birth, a variety of factors that would never be recorded in any database for research purposes, and it generates a unique encrypted ID.

Eliene Augenbraun: That way, researchers can extract medical data without identifying individuals.

Nancy Dreyer: Now, it’s not perfect but it’s pretty darn good. I think our error rates are under 5 percent and some say close to 2 percent but that allows us to take data from outpatient pharmacy prescriptions and link it with other data on health insurance claims, and in some cases there is the ability to link some or all of those data with electronic health records — if a patient has given permission for that. But recognizing that if you can be pretty sure you’ve linked say those prescriptions with that health record, all of a sudden, I’m in public health science heaven.

Eliene Augenbraun: These databanks know your gender, age, diagnosis, and treatment from your medical records. CVS also assigns you an identifier, different from IQVIA’s, but they also retain your gender, age, prescriptions, and your insurance ID. The insurance company knows what IQVIA and CVS know. Given this information, IQVIA can accurately assemble one merged record 95 to 98 percent of the time, especially if the patient consented to sharing their records.
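
The general idea of linking records through a derived ID, rather than through names, can be sketched as follows. The transcript does not describe IQVIA’s actual algorithm, so this is a generic illustration that swaps in a keyed hash (HMAC-SHA256) from Python’s standard library; the secret key, the field names, and the sample records are all hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"hypothetical-shared-secret"  # assumption: held only by a trusted party

def link_token(name, date_of_birth):
    """Derive a stable pseudonymous ID from normalized identifiers."""
    normalized = f"{name.strip().lower()}|{date_of_birth}".encode("utf-8")
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()

def tokenize(records):
    """Replace name and date of birth with the derived token."""
    out = {}
    for rec in records:
        token = link_token(rec["name"], rec["dob"])
        out[token] = {k: v for k, v in rec.items() if k not in ("name", "dob")}
    return out

# Invented records from two sources that spell the same name differently.
pharmacy = [{"name": "Jane Doe ", "dob": "1970-01-31", "drug": "drug A"}]
claims = [{"name": "jane doe", "dob": "1970-01-31", "diagnosis": "condition X"}]

pharmacy_by_token = tokenize(pharmacy)
claims_by_token = tokenize(claims)

# Link on the token alone; no name or birth date survives in the merged record.
linked = {
    token: {**pharmacy_by_token[token], **claims_by_token[token]}
    for token in pharmacy_by_token.keys() & claims_by_token.keys()
}
```

A scheme like this matches only when the normalized identifiers agree exactly, which is one reason a real linkage system reports an error rate, as Dreyer notes, rather than a guarantee.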

Nancy Dreyer: To put your mind at ease Eliene, in some of those examples, with randomization, you’re getting people’s permission to use their follow-up data, so this doesn’t have to be “everything’s in the cloud and we just suck up your private health care data.” There are plenty of ways that people consent, can consent and many situations where they should consent and they must consent. But the privacy laws in the United States are not regulated by the FDA. So it’s going to take a big, and it’s such a sensitive area. I’m not sure how we move forward.

Arthur Caplan: The challenge to informed consent is the big datasets already exist and companies are trying to merge them and correlate them, sometimes with an eye toward improving your health, sometimes with an eye toward marketing products. Sometimes with an eye towards trying to figure out what sort of incentives they might put before you to get you to use non-health care products. So that hasn’t been consented to, and it’s already going on. And the question is what to do? Should we stop, and re-consent everybody or are we just going to say that these enormous datasets are already out of our control? Which, by the way, I happen to think is true.

Eliene Augenbraun: Industry experts are keenly aware: To reap the benefits of big data they will need to address the public’s privacy concerns. But even if they figure that part out, there’s another problem — making sure that bias doesn’t creep in. There are several recent examples of how introducing AI into law enforcement and mortgage risk calculators reinforces racism, sexism, and other institutional forms of inequality. It’s not that the algorithms are racist or sexist, but the data being used includes and perpetuates the effects of racism and sexism. Listen to this piece of music. And listen to the feature the AI algorithm finds. Now, let’s introduce bias by replacing every third note with a tone that is one tone higher. The AI can still pull out a feature, but it is a different feature. Same AI, different answer.

Nicola Toschi: I can understand that it can be sort of shocking, but it’s almost, I don’t want to say trivial, but it’s almost a given in data science. You need to make sure that to the best of your knowledge, you’re incorporating enough relevant information in your dataset, even if you don’t know exactly where that information is.

Eliene Augenbraun: When it comes to health care, poor Americans are likely to have worse outcomes than rich Americans. The average African American or Native American or Hispanic patient is poorer than the average white or Asian American. So just like our biased note change example, it is possible — likely — that AI will find differences between African Americans and Asian Americans that merely reflect their income level. That will not be helpful for developing precise treatments. Experts will need to figure out which patterns relate to income and which to biology.
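
A toy calculation shows how an apparent difference between two groups can turn out to be an income effect. The numbers below are invented purely for illustration and describe no real population; "A" and "B" are placeholder group labels, and the outcome score is hypothetical.

```python
from statistics import mean

# Invented records: (group, income_bracket, outcome_score). Not real data.
records = [
    ("A", "low", 50), ("A", "low", 52), ("A", "low", 48), ("A", "high", 70),
    ("B", "low", 50), ("B", "high", 70), ("B", "high", 72), ("B", "high", 68),
]

def average_outcome(rows):
    return mean(score for _, _, score in rows)

def by_group(rows, group):
    return [r for r in rows if r[0] == group]

# Crude comparison: ignores income entirely.
crude_gap = average_outcome(by_group(records, "B")) - average_outcome(by_group(records, "A"))

# Stratified comparison: compare the groups only within the same income bracket.
stratified_gaps = {}
for bracket in ("low", "high"):
    stratum = [r for r in records if r[1] == bracket]
    stratified_gaps[bracket] = (
        average_outcome(by_group(stratum, "B")) - average_outcome(by_group(stratum, "A"))
    )
```

In this made-up table the crude comparison shows a gap between the groups, but the gap disappears inside each income bracket, because group A simply contains more low-income patients. An algorithm that never sees income would have no way to tell the two explanations apart.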

Sharyl Nass: Ultimately it’s going to require a very close collaboration between people who have deep clinical expertise and those who have deep analytical expertise.

Nicola Toschi: So, you know, it’s much more of an art and of a craft than I guess one could imagine, especially machine learning.

Sharyl Nass: You need to have people who can take a look at what’s coming out of those, uh, analytical algorithms and make sure that they actually make sense or at least to test them because we don’t want to have a black box algorithm that only, you know, one very sophisticated data analyst can understand and then just apply that broadly within the clinic.

Eliene Augenbraun: Everything worth knowing about our medical past — genes, diagnoses, treatments — is being deposited into databanks. Like a bank vault full of money, these deposits can be invested to make health care more affordable and more effective. In data as in life, we are most valuable when we are most connected. But so-called precision medicine can only unlock its precision if the pharmaceutical industry, research establishment, governments, hospitals, and all other stakeholders address the challenges of clinical trial costs, biased data, security, privacy, and fairness.

Sharyl Nass: We’re in an era now where we can collect almost infinite amounts of data, and apply incredibly powerful algorithms to analyze those data. What happens in a Petri dish with cancer cells is not always relevant to patients. Even what happens in whole animals, like mice, may not be relevant to cancer patients. So that’s why we really need to have more data either via clinical trials or from real world clinical evidence, as I described earlier.

Lydia Chain: Eliene, thanks for joining us.

Eliene Augenbraun: My pleasure and my honor.

Lydia Chain: Precision medicine has been a march towards collecting more and better data, and then using it better to address a variety of health concerns. How are those tools being used during this Covid-19 pandemic?

Eliene Augenbraun: Probably in many ways. One way is that there’s been more openness about data and sharing data more quickly. That’s been a trend that’s been going on for the last half a dozen years. Covid-19 is an example where data shared widely is actually changing the course of the pandemic. We knew the structure of the coronavirus proteins, like, right away. We knew the infectivity from Wuhan before it hit in the United States. We knew about symptoms from Italy before it overwhelmed the health care system in New York. These are almost real-time reports coming out in an open-access kind of format. And so by having preprint servers, more open access research papers so that everyone has access to the data. There are open access databases, all different kinds of databases. The U.S. government, for example, has assembled a bunch of industry leaders to make machine readable papers and then apply artificial intelligence using semantic search and other kinds of AI techniques to look for patterns in the publications that are coming out so rapidly. And these are all, you know, the data analysis techniques, the combining of databases, the attempt to get interoperability to work, all of these things that have been designed in the service of precision medicine, now they are being co-opted to address the pandemic.

Lydia Chain: One topic you touched on in the story is regulation — what is the role of regulation in this and what kinds of questions might regulators be asking?

Eliene Augenbraun: Regulation has to be addressed at local, state, national, and international levels. It’s easy to imagine that a regulation that protects privacy in Europe like GDPR is very, very helpful, but it doesn’t protect you in the United States. The United States has slightly different laws. The kinds of issues that need to be addressed at whatever the appropriate level is, you know, can the insurance companies, for example, use your genetic data to discriminate against you, or, you know, even give you better rates? Can a state government deny you a driver’s license if they know you have a risk of macular degeneration? Can an insurance company deny you life insurance based on your genetic profile, if you happen to have a couple of oncogenes? If you have a website that has data that will allow you to identify people, how private are those data when they get combined across state lines, country lines? It’s important to really, you know, figure out all levels of protection for people’s privacy and protection for people from discrimination.

Lydia Chain: One of the points that Arthur Caplan brought up was the idea of informed consent. As a person is considering giving up or even selling some of their medical data, what kinds of questions might they be thinking about, what questions should they be asking?

Eliene Augenbraun: Informed consent is the idea that you actually understand what you’re consenting to and that the person to whom you are consenting is telling you the truth about what you’re consenting to. So, for example, if you agreed to have surgery on your appendix, and they remove your left finger, yes, you agreed to have surgery, but not that surgery. So, I think Caplan’s point is that in this age of, you know, we don’t know what will be done with the data, but we know there can be semantic search, we know there can be combining your data with a bunch of other people’s data. In the media business, when we would ask people to sign a release form for an interview, we would ask them, you know, we want you to agree to letting your voice be used on the radio, we want you to agree to use your image on television, then there’s video online, social media, we want social media, educational media, airlines. It got added on and on and on and on and on, all of these permissions that we needed to ask people. And so eventually those forms changed to be very, very short. Will you give us the right to use this interview in any way we feel like, now or forever more? And, you know, people object to that. So, we need to rethink what informed consent means.

Lydia Chain: Eliene Augenbraun is a multimedia producer based in New York. She created some of the supplemental music in today’s episode. Additional music is by Kevin MacLeod at Incompetech. Our theme music is produced by the Undark team, and I’m your host, Lydia Chain. See you next month.