A man wakes up in a cheap motel room. He doesn’t recognize the place. He doesn’t know how he got here, or how long he’s been here. He rummages through the drawers — nothing. He looks in the mirror. His body is covered with tattoos, like “Find him and kill him” and “She is gone. Time still passes” and “John G. raped and killed my wife” and “Never answer the phone.” He finds a handful of Polaroid photos, mostly of people, scrawled with cryptic notes, including one that says, “He’s Teddy. Don’t believe his lies. He’s the one. Kill him.”

This article is excerpted from Michael D. Lemonick’s new book, “The Perpetual Now: A Story of Amnesia, Memory, and Love,” being published this month by Doubleday, an imprint of The Knopf Doubleday Publishing Group, a division of Penguin Random House LLC.

That’s how the 2000 movie “Memento” begins. Before long, you learn that Leonard, the man in the motel room, has anterograde amnesia. Like the real-life amnesics Henry Molaison (the much-studied “Patient H.M.”) and Lonni Sue Johnson, the artist whose case I’ve been reporting, he can’t form new memories. But in Leonard’s case, he remembers everything that happened before his brain injury with crystal clarity. You learn that his every waking hour is devoted to a mission. He’s determined to get revenge on the man who raped and murdered his wife and cracked Leonard on the skull, causing what he calls “my condition.” Since he can’t remember anything that happened after his brain injury, he takes photos, scribbles notes, and has himself tattooed with essential details that will help him recall whatever he learns about the killer.

As with almost any movie, some of the scientific details are dubious. In the real world, victims of anterograde amnesia almost always suffer as well from some degree of retrograde amnesia, the inability to bring up memories from before the injury. The loss tends to be far more complete for autobiographical memory than for semantic memory (the memory of general facts about the world), and it’s most acute for the years preceding the onset of amnesia. Just as with Alzheimer’s patients, the distant past is clearer than the recent past. Yet even though the attack happened literally seconds before he got the brain injury that robbed him of his memory, Leonard recalls not just that his wife was murdered but also the specific autobiographical details of how it unfolded. Not only that: He is also fully aware that he has anterograde amnesia. He keeps telling people about what he calls “my condition,” although he keeps forgetting that he told them. Real amnesia victims don’t have that insight. In the early years, Lonni Sue Johnson might concede that she had a minor memory problem. Now she doesn’t admit to any problem at all. “I think my memory is pretty good,” she has told me more than once.

Still, the representation of amnesia isn’t bad for Hollywood. Unlike, say, “50 First Dates,” which clinical neuropsychologist Sallie Baxendale of University College London has written “bears no relation to any known neurological or psychiatric condition,” “Memento” gives at least some sense of what amnesia must feel like. It does so through the trick of putting scenes in reverse chronological order. When you see something unfolding on the screen, you have no idea what came before. “What they captured extraordinarily well,” neuroscientist Neal Cohen said when I visited him at the University of Illinois, “is the sense of a little island isolated from the rest of time, in which all is unconnected to the next thing or the thing that preceded it.”

The fictional Leonard does things that would be impossible for a real amnesic, but that doesn’t make him entirely unrealistic. At least one amnesic in the literature also does things no amnesia victim should be able to do. The pseudonymous “Angie,” whom Cohen has studied, was a 29-year-old special education teacher working on her Ph.D. when she had a bad reaction to a flu shot and went into anaphylactic shock. Unfortunately, she was driving at the time. She slammed into a telephone pole and sustained major damage to her medial temporal lobes, just as Henry Molaison did in surgery and Lonni Sue Johnson did from encephalitis. Angie came out of the hospital with severe anterograde amnesia. This was in 1985, and without access to treatment she floundered, eventually losing her friends, her fiancé, and her job.

“It’s a terrible story,” Cohen said. “Except she was a remarkable person. She is a remarkable person.” Bit by bit, Angie built a new life. She moved to a new town. She went back to graduate school. She met someone, got married, and helped raise the man’s three children from a previous marriage. She got a job as a project manager for an educational testing company, and did it successfully. She developed several new, close friendships.

“You say, ‘Oh, come on. How is that possible?’” Cohen said. “But if you go to her house, she looks like any other overburdened soccer mom. Little stickies everywhere, notes everywhere.” She uses the same tools you or I would use when we have a million things going on. “And then, she’s extraordinarily good at structuring her life to be as orderly as it could possibly be.” Through relentless practice, for example, she learned the driving routes to the supermarket and back, and from home to the kids’ schools — though she couldn’t link them together, couldn’t drive from the supermarket to the school. And if there was a detour along one of the familiar routes, Cohen said, “forget it. She just had to drive back home.”

At work, Angie was equally organized. If you asked her what she did for a living, she’d answer, “I manage projects.” If you asked her what kind of projects, she would have no idea. She couldn’t tell you what the job entailed, or the names or any other facts about the people she worked with. Nearly any facts, anyway. She did tell the scientists that she had to watch out for one of her coworkers because “she’ll stab you in the back,” but couldn’t say how she’d come to feel that way. She didn’t know what her salary was. Again, said Cohen, “you’re thinking, ‘How is that possible?’ The answer is that she was good. She was really, really good.” She was a master at creating systems that served as external memories to replace the functions she’d lost — extensive notes recording her thoughts, color-coded folders, a heavily annotated calendar, all with multiple levels of redundancy. In order to have as normal a life as possible, Angie was determined to come across as normal. There was no way she was going to let her coworkers know how profound her impairment was. In staff meetings, she would have her subordinates report, one by one, on the progress of their projects. While they talked, she took notes and then reiterated their statements. For her, these were overt strategies for compensating for her memory impairment. For her employees, these actions were interpreted as an open leadership style in which their boss provided validation of their ideas and suggestions.

To put it bluntly, Angie is a gifted con artist, albeit with an honorable motive. She’s acutely aware of her limitations, and uses her sharp intellect and unusually high social intelligence to seem as normal as possible — and she pulls it off. In the course of their research, members of Cohen’s lab talked with her about the unrelenting challenge of keeping up appearances. It was clear that she found it exhausting. She ended up taking early retirement, and she stopped participating in neuroscience research.

Lonni Sue Johnson, whose hippocampus was severely damaged by encephalitis, is more densely amnesic than Angie, but she has also latched onto systems that give her life some sort of structure. (In “Memento,” Leonard’s system was to have information he needed to remember tattooed on his body.) One of these is puzzle-making — a self-assigned “job” at which she works with relentless focus, enthusiasm, and joy. Another is her schedule. Once she became immersed in her puzzles, she needed to know when she was likely to be interrupted for things like meals and doctor visits. She carries her schedule everywhere and consults it several times an hour, checking off things as she completes them and adding new items as she learns about them. With no memory of what she did yesterday and no sense of what she’ll do tomorrow, the daily schedules give her an artificial timeline.

This lack of a time sense appears to be common in profound amnesia, but it wasn’t something neuroscientists thought much about until Kent Cochrane came along. Cochrane, a Canadian, suffered a severe head injury in a motorcycle accident in 1981, at the age of 30. When he finally came to full consciousness, he had lost his ability to form new memories, just like Molaison, and his memories of episodes from his past had been almost entirely destroyed. Yet his semantic memory was largely intact, and he was otherwise cognitively normal.

At the time, the distinction between episodic and semantic memory was a new one. As early as 1890, the Harvard psychologist William James had talked about the difference between short-term and long-term memories, and in 1949 Gilbert Ryle had distinguished between implicit (or procedural) and declarative memory — “knowing how” versus “knowing that.” But it wasn’t until 1972 that Endel Tulving at the University of Toronto first used the term “episodic memory,” in his book “Organization of Memory.” He began to study Cochrane a decade later.

Episodic memory is great for telling stories about things that happened to you long ago. Many of the basic memories we rely on to get through life aren’t episodic, however; they’re memories of how to walk and talk and get dressed and recognize people we love and get from here to there. The English amnesic Clive Wearing, a musician written about by Oliver Sacks, had an episodic memory devastated by encephalitis, but he could still recognize his wife and remember how much he loved her. He could still conduct a choir and play the piano. Similarly, Lonni Sue can still read, write, play word games, play viola, and draw like a bandit. The fact that she can’t remember her past except in generalities is a tragedy of sorts, but how essential is it, really? The fact that she can’t form new memories is the more acute problem.

Tulving noticed something else about Kent Cochrane, however, that may be a clue to why episodic memory is so crucial. “One revealing thing that Tulving pointed out,” said Morris Moscovitch, also of the University of Toronto, “is that loss of episodic memory not only affected Cochrane’s memory of the past but also impaired his ability to imagine the future. He couldn’t describe what he was going to do in a week’s time in any more detail than he could describe what he did a week ago.” The same had been true of Henry Molaison, and it would turn out to be true of Lonni Sue as well. If you asked Henry what he was going to do the next day, he would invariably answer, “Whatever’s beneficial.” He didn’t really have any idea.

Tulving thought this was a big deal. “Unidirectionality of time,” he wrote in a 2002 article in Annual Review of Psychology, “is one of nature’s most fundamental laws. . . . Galaxies and stars are born and they die, living creatures are young before they grow old, causes always precede effects, there is no return to yesterday, and so on and on. Time’s flow is irreversible.”

With just one exception, he wrote: human memory — in particular, the memory of specific events from our past. What distinguishes our episodic memory from our semantic memory, Tulving argued, was that the first is a form of mental time travel. You don’t just recall facts about yourself when you bring up an episodic memory; you re-immerse yourself in the past. For a short while, it’s as though you’re living there once again.

When Aline Johnson, Lonni Sue’s sister, whom I’d known in middle school and who knew I was a writer, walked up to me on the street in Princeton to tell me for the first time about her sister, it put me right back in school for just a few moments, complete with sights, sounds, and feelings. For that to happen, according to Tulving’s theory about episodic memory, three things needed to be true. First, I had to have a sense of myself as existing in time, with a past, a present, and a future. There’s no evidence that even the most sophisticated animals have this sense. “They have minds, they are conscious of their world, and they rely as much on learning and memory in acquiring the skills needed for survival as we do,” Tulving wrote. But their consciousness is confined to a sort of timeless state — a permanent present tense, as the H.M. researcher Suzanne Corkin put it. The second requirement for episodic memory, Tulving said, is something he called autonoetic awareness, the conscious awareness of our own mental state. When Aline’s face transported me to middle school, I understood that I wasn’t actually there — that the past is different from the present, and that this is where my mental images were coming from. People with schizophrenia who hear voices or have other disturbing hallucinations are said to suffer from autonoetic agnosia, a lack of awareness that what they’re experiencing is not reality but a mental state. And the third requirement, Tulving wrote, “is that mental time travel requires a traveler. No traveler, no travel.” He was talking about a sense of self, the sense that there’s an “I,” a unique individual with a particular history. The primary reason Kent Cochrane had no episodic memories of the past and couldn’t form new ones, Tulving concluded, was that he had lost his sense of existence in time.

The same appears to be true of Lonni Sue, to a large extent. She is no longer able to visit the past mentally; she can talk about it only in a general way, as though she were consulting an encyclopedia entry about her life. She also can’t imagine the future: Ask her what she’ll be doing tomorrow and she replies, “I have to check my schedule.” She can’t remember what happened more than a few minutes ago, and without a rich memory of the past she can’t make predictions about the future. But with that printed, annotated schedule, says Aline, “she’s not floating around in time anymore.”

Losing a direct sense of yourself as having a past, present, and future doesn’t affect semantic memory, because semantic memories are unanchored to a personal sense of time. When Molaison told Corkin, “We used to go on vacations along the Mohawk Trail,” it wasn’t attached to any particular episode. It’s easy to mistake this for an autobiographical memory, not a semantic one, because we tend to talk about our past in generalities. “When you ask people about something they did in the past,” Moscovitch said, “you don’t really expect to hear a lot of detail. You want to know, ‘Did you go on vacation to France?’ ‘Oh, yeah.’ ‘Did you have a good time?’ ‘Definitely!’ ‘Did you do anything interesting?’ ‘Oh, yeah. We went boating.’” That’s good enough for most conversations. It’s not clear unless you probe further whether this is a semantic or an episodic memory. The same is true for Lonni Sue. When she says, “I used to fly an airplane” or “I drew covers for The New Yorker,” it sounds as though she might be thinking about specific incidents. It’s only when you ask, “What was the subject of your first New Yorker cover? Where were you when you found it had been accepted? How did you feel?” that you realize that she can’t come up with an answer.

Beyond that, most episodic memories tend to be converted into semantic memories with the passage of time. There really was a first time you heard that Paris is the capital of France, or that Franklin Roosevelt was president during World War II. For a short while, that might have been an episodic memory, but no more. “Just a week from now,” Moscovitch said, “if somebody asks you about the conversation we’re having right now, you’ll be able to tell them the gist of what we talked about, not the details. Two years from now you’ll maybe remember that you called me, but you won’t have a clear idea of what we talked about except that it had to do with amnesia.”

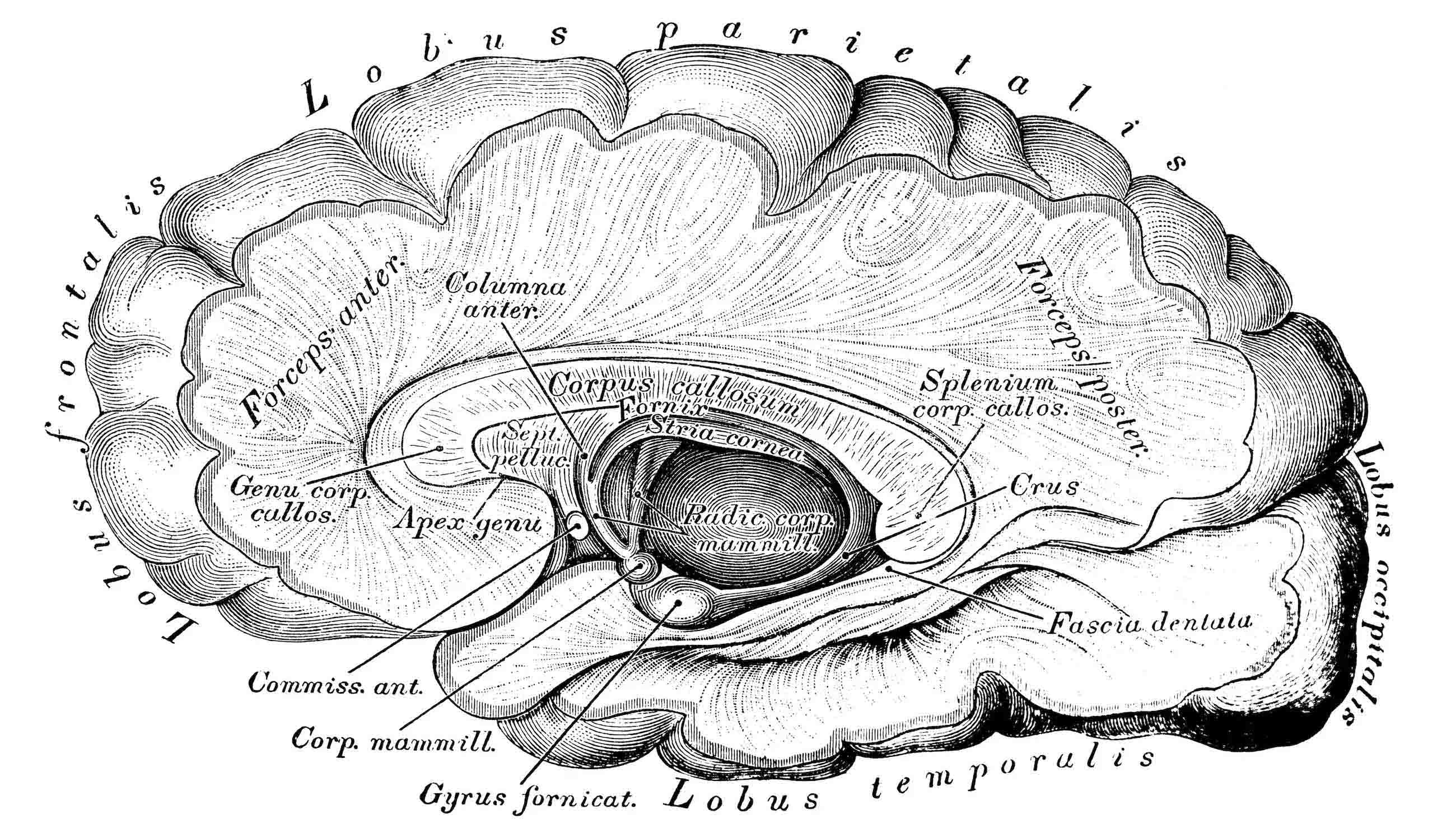

Early on, therefore, while it was clear that H.M. couldn’t form new episodic memories, it wasn’t nearly as obvious that he couldn’t access old ones. Corkin and her mentor Brenda Milner actually thought he could, at least from his childhood. As a result, neuroscientists thought for years that the hippocampus and its neighboring structures in the medial temporal lobe were involved only in forming new memories, and that once these had been formed, they were permanently there. Once the host at the cocktail party introduced the guests to one another, his or her services were no longer needed. The introduction took a while, they thought; it might be several years before a memory was fully consolidated into long-term storage. But once that was done, so was the job of the hippocampus.

The work of Tulving and Moscovitch and many other researchers, working with both humans and animals, made it clear that this wasn’t the case after all. Memories are stored throughout the brain, but for those special memories we can call up vividly, Moscovitch said, “the hippocampus is always involved in recovering and reconstructing the past.” When Corkin revisited her notes and tests, Moscovitch said, she “realized it’s true: H.M. doesn’t have any rich episodic memories of the past.”

Being unable to call up episodic memories may be embarrassing, but it’s not in itself debilitating. Being unable to form new ones is more problematic, but even that may not be the worst consequence of this particular form of brain damage.

Tulving’s great insight was that the real issue might be an inability to imagine the future. Patients with medial-temporal-lobe damage turn out to have terrible difficulty solving problems in which you have to rely on explicit memories of the past — how to get a new job, make friends in a new neighborhood, or try to make up with a lover after a breakup.

Normal people can at least come up with a strategy for doing such things. But how well you do them depends on how richly you can create a mental simulation — how vividly you can imagine a solution, based on your memories of similar situations and their outcome. “There’s a high correlation between how rich your imagination is and how well you can solve these problems,” Moscovitch said. Since human existence is more or less an exercise in continual problem-solving, the inability to access your past and to imagine your future is catastrophic. This doesn’t prevent Lonni Sue from living a contented, even joyful existence. But she can’t devise strategies for solving any but the simplest of problems. She has difficulty making decisions — wavering, for instance, between dinner and attending to her dying mother. There’s no way she can function independently in the world.

More than a half century after Henry Molaison had his brain tissue suctioned out by a surgeon trying to cure his epilepsy, neuroscientists understand that the term “memory” covers many different brain systems that work together to allow us to navigate the world. The boundaries between them aren’t always as clear as the textbooks suggest: Lonni Sue’s abilities to sight-read music on the viola, or to improvise an alphabet song, or to describe in detail how you prepare and paint a watercolor, aren’t entirely declarative, but they aren’t entirely procedural either. If Tulving is right, however, episodic memory is in a special class. It is, he wrote, an “evolutionary frill,” a sort of icing, a layer that sits on top of the memory functions we share with less sophisticated animals. It looms large in our assumptions about what memory is, but it’s probably a tiny fraction of the amount we’ve learned in total. It was these other memory functions our prehuman ancestors needed for survival — procedural memory, so they could learn to use tools; statistical learning, so they could figure out where best to find food and how to avoid predators; and associative learning, so they could acquire and retain information about what plants were nutritious and what plants were poisonous. Episodic memory wouldn’t have helped them very much in the struggle to live and reproduce. But modern human society would be impossible without it.

Michael D. Lemonick is the opinion editor at Scientific American, a former senior writer for Time, and the author of six previous books. He also teaches writing at Princeton University.