In 2016, Sophie Jamal’s career took a turn for the worse. The bone researcher and physician was banned from federal funding for life in Canada after a committee found her guilty of manipulating data, presenting fabricated evidence to investigators, and blaming a research assistant for the fudged data. She was also ordered to pay back more than 253,000 Canadian dollars she had received in funding from the Canadian Institutes of Health Research. Then, in March 2018, she was stripped of her medical license.

Two years later, Jamal is back on the medical scene. According to The Toronto Sun, her medical license was reinstated after she presented evidence to the College of Physicians and Surgeons of Ontario showing that her misconduct was a direct result of her long-term mental health problems. She can now return to practicing as an endocrinologist, as long as she doesn’t conduct clinical research and continues therapy for her mental health problems.

Whether Jamal is fit to return to treating patients is a matter for medical bodies and institutions in Canada to decide. What’s true is that scientists guilty of research misconduct often get away with nothing more than a slap on the wrist, and that is if they’re caught in the first place. A survey of more than 1,100 researchers at eight European universities published earlier this year found that a large amount of research misconduct goes unreported, with early-career researchers the least likely to blow the whistle.

Part of the problem is the ambiguity around how to define research misconduct. The U.S. Department of Health & Human Services’ Office of Research Integrity (ORI), which oversees public health research, defines it as “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results.” But other bodies and other countries have adopted different definitions, or none at all. One specialist in the area has proposed redefining research misconduct as distorted reporting, which would cover not just the reporting of incorrect information but also the omission of vital information.

Only a small fraction of research misconduct ever comes to light. What’s more, many commonplace research misbehaviors are categorized as questionable research practices (QRPs) rather than outright misconduct. These include publication bias, where scholarly journals preferentially publish positive results over negative ones; p-hacking, where a researcher plays around with data until they meet significance thresholds; and cherry-picking, or selective reporting of data. Other QRPs include HARKing (Hypothesizing After the Results are Known, where researchers search already collected data for trends and then present them as if they had been predicted in advance) and publishing the same study twice.

One survey published in June notes that gift authorship, where researchers who made little or no contribution to a study are included as co-authors, is perceived to be the most common type of research fraud in the U.S. The opposite practice, ghost authorship, where worthy authors are left off an author list, is also common. A 2019 survey of just under 500 researchers found that nearly half had ghostwritten peer reviews on behalf of senior faculty. In a recent survey of junior researchers in Australia, roughly a third of the more than 600 respondents said questionable research practices of colleagues at their institution had harmed their work.

But QRPs remain a gray area for many researchers, institutions, publishers, and funders around the world. And prevailing incentives to publish as many papers as possible, especially in flashy journals, to boost one’s odds for promotion or grant funding, actively encourage these practices.

So what can be done? To start, there needs to be a clear consensus in the global science community (including publishers, universities, academics, and funders) on what constitutes research misconduct. Only then will it be possible to clearly delineate which forms of misconduct should be criminalized, and what form the punishments should take. Inevitably, decisions on specific punishments will need to be made on a case-by-case basis: Investigators will need to consider, for instance, the impact the misconduct may have had on human life, the amount of government funding that was wasted, and the motivations behind the wrongdoer’s actions. But a clear, generally accepted understanding of what constitutes misconduct will help to ensure that those judgments are fair.

The globally adopted definition of research misconduct, whatever it may be, should encompass QRPs. These infractions should be treated much more seriously and need to be reported by those who suffer from them or encounter them. Early-career researchers shouldn’t have to fear the consequences of making complaints about senior staff, and universities should protect whistleblowers.

To that end, the responsibility for investigating research misconduct should shift from universities and institutions to independent governmental bodies. Although the U.S. has the ORI in place to oversee research integrity, most countries leave it to the research institutions to police their own staff. Those institutions often brush misconduct investigations under the carpet, fearing that public awareness of such issues would tarnish their reputations. Scientists found guilty of misconduct are rarely publicly named and often lightly punished. As a result, scientific fraudsters slip through the cracks, jumping from one institution to another, spreading bad practices and polluting the literature with subpar studies.

Independent governmental watchdog committees, by contrast, would likely be better positioned to investigate cases with less bias and less fear of being sued. These committees should have enough teeth to be able to issue sanctions on researchers or universities as they deem necessary. The U.K. is creating a national research integrity committee, due to be launched this year, that will keep tabs on whether universities are conducting misconduct investigations robustly and appropriately. U.K. ministers have hinted that the committee could be empowered to issue sanctions, but details remain scarce.

But even in the U.S. — where the ORI does have the power to sanction malfeasant researchers by naming them, shaming them, and issuing funding bans — repeat offenses happen with distressing regularity. A 2017 study of the scholarly activities of 284 academics who were found by the ORI to have committed research misconduct reported that nearly half of these researchers continued to receive federal funding after their misconduct, to the tune of more than $123 million.

In a report released this month, the European Molecular Biology Organization, a professional organization of life science researchers, outlined a number of options for addressing research fraud on the continent, including setting up a pan-European body to investigate breaches of research integrity on behalf of research institutions, funders, and journals. Such a committee would be a step in the right direction. But this is a good opportunity to improve science on a grander scale. By working toward a global consensus that penalizes lesser research evils alongside more serious fraud, and by establishing independent bodies to enforce those penalties, we can signal to researchers, institutions, and journals that what was until now considered business as usual will no longer be acceptable.

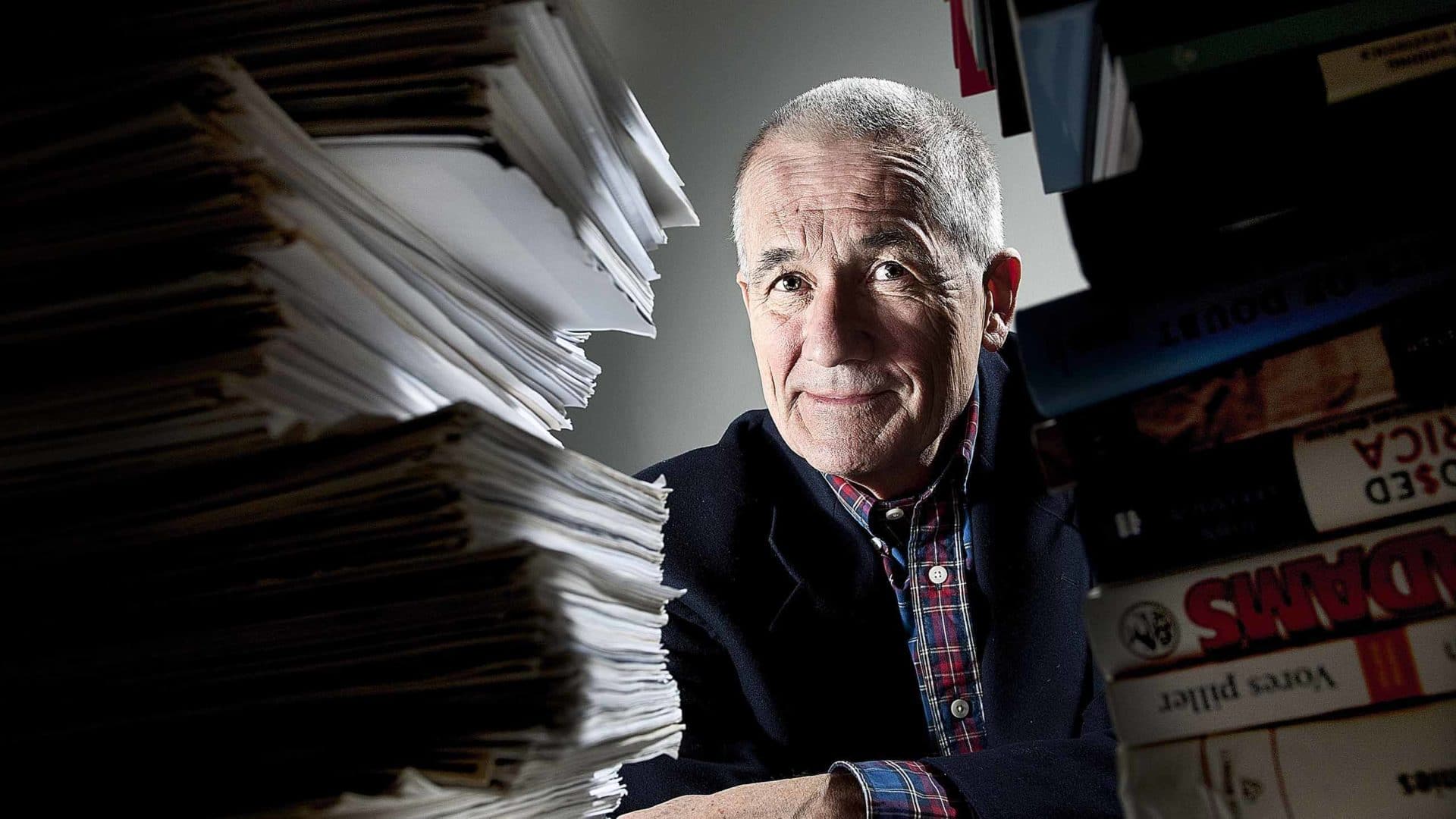

Dalmeet Singh Chawla is a freelance science journalist in London, U.K.

Comments are automatically closed one year after article publication. Archived comments are below.

Research misconduct/fraud is often the ladder on which institutional leaders (Department chairs, program directors, Deans and Chancellor ranks) ascend. They simply don’t see their actions as being nefarious. Thus, the enforcement of rules of ethics and actionable peer review are continuously and dynamically diluted by the foxes watching over the hen coops.

In my personal experience (which has not seen the light of day despite extensive reporting to academic and oversight authorities), the nexus most often also implicates the governmental authorities who are actually supposed to regulate and enforce the (deliberately?) ill-defined rules governing research misconduct and fraud.

All I can advise is that if you have a strong sense of moral duty and/or ethical standards, best bury your head in the sand instead of speaking out against institutional leaders who practice and exploit such tactics. If you speak up, it’s likely you will end up losing your career, as I did.

My experience has been that if they bring in enough money with the fraud, they’re usually given an endowed chair and are set for life.