On Neuroscience and Morality: Five Questions for Patricia S. Churchland

Patricia S. Churchland is a key figure in the field of neurophilosophy, which employs a multidisciplinary lens to examine how neurobiology contributes to philosophical and ethical thinking. In her new book, “Conscience: The Origins of Moral Intuition,” Churchland makes the case that neuroscience, evolution, and biology are essential to understanding moral decision-making and how we behave in social environments.

In the past, “philosophers thought it was impossible that neuroscience would ever be able to tell us anything about the nature of the self, or the nature of decision-making,” the author says.

The way we reach moral conclusions, she asserts, has a lot more to do with our neural circuitry than we realize. We are fundamentally hard-wired to form attachments, for instance, which greatly influence our moral decision-making. Also, our brains are constantly using reinforcement learning — observing consequences after specific actions and adjusting our behavior accordingly.

Churchland, who teaches philosophy at the University of California, San Diego, also presents research showing that our individual neuro-architecture is heavily influenced by genetics: political attitudes, for instance, are 40 to 50 percent heritable, recent scientific studies suggest.

While some critics are skeptical that neuroscience plays an important role in morality, and argue that Churchland’s brand of neurophilosophy undermines widely accepted philosophical principles, she insists that she is a philosopher, first and foremost, and that she isn’t abandoning traditional philosophical inquiry as much as expanding its scope.

For this installment of the Undark Five, I spoke with her about how we develop social values, the similarities between human and animal brains, and what is going on in the brains of psychopaths, among other topics. Our conversation has been edited for length and clarity.

Undark: You write that for years, other philosophers “bashed me for studying the brain.” What kind of resistance did you face when you brought neuroscience into the study of morality? And has that resistance changed over time?

Patricia Churchland: As a philosopher, when I started to study neuroscience, I wasn’t particularly interested in morality. I was interested in the nature of knowledge, perception, decision-making, and consciousness. The resistance at the time was that philosophers largely believed that philosophers were going to use “conceptual analysis,” or words, to figure out the scope and limits of science. And once philosophers had laid down those foundations, maybe science could do its work.

In particular, they thought it was true about the nature of the mind that there were certain things that philosophers understood that scientists — poor things! — did not understand.

Many philosophers thought it was impossible that neuroscience would ever be able to tell us anything about the nature of the self, or the nature of decision-making, or the nature of how we represent the world. They thought that my rather different approach — which was that, in the fullness of time, neuroscience was going to have a lot to say about all of these things — was not only wrong, but it was stupid, and it was undermining philosophy.

I think what has shifted is that the older guys are retiring, and the younger people can see the value of interdisciplinary work.

UD: Can you talk about how you became interested in morality, and found your way to neuroscience?

PC: Several lines of evidence conjointly convinced me that, yes, we could understand something — maybe a lot — about the neurobiological basis for moral behavior.

First, the animal studies of researchers such as Frans de Waal and Shirley Strum clearly showed that other primates were highly social, liked to be together, showed empathy for the suffering of their kin, shared food, and cooperated. Like wolves — and like us. Moreover, this was almost certainly true of ancient hominins such as Homo erectus and Homo neanderthalensis who lived in stable groups, had stone tools and the use of fire. These data strongly imply an evolutionary basis for the foundational features of social behavior in mammals.

Second, anthropologists studying existing hunter-gatherer scavenger groups showed that these groups had stable customs and a morality suited to their ecology, even though they never took a philosophy class.

The third line of evidence involved the study of neurohormones, such as oxytocin, which is central to the regulation of attachment and bonding between individuals. Though many neurochemicals play a role in social behavior, oxytocin turns out to be essential for attachment, which then ushers in a whole range of behavioral features, such as learning local norms and customs.

UD: If human brains are basically wired the same way, how is it that people reach different conclusions when it comes to moral decision-making?

PC: While it’s true that the macro-circuitry is very similar from one person to another — which is why we’re more like each other than any of us is like, say, a vervet monkey — through learning and through very deep differences in temperament we can come to different conclusions about things.

Some of us are extroverted, some are introverted, some are prone to anxiety, some are happy to take risks — and some of those temperamental features also play into how we interact socially and, hence, how we make our moral judgments.

So those things all matter, as well as the experiences we have. Experience changes the microcircuitry, at the level of the single neuron, and how one neuron talks to another. There are really those two domains that are relevant to the differences in moral judgment.

UD: Do animals with smaller, but similar, brains to humans possess a conscience?

PC: It’s a really interesting question. Only a few years ago, many philosophers and some ethologists — people who study animal behavior — would say, “Oh no, they don’t have anything like that!”

But de Waal, a well-known ethologist, has become convinced that when we really look at the behavior of highly social mammals, we can see very clearly that there are things like feeling bad that you did a dreadful thing. Animals do feel a kind of guilt when they do something inappropriate.

There is no doubt that when a dog does something that it knows it’s really not supposed to do, and it’s caught, it does pretty much what we do — its head goes down, it hunches over, its tail goes between its legs, and it skulks off. That guilt behavior persists as it tries to reconcile or ask for forgiveness.

De Waal believes there are no unique human emotions. We share those deep emotions, because they’re part of the subcortical structures, with all mammals. Because humans have language, we may be able to give fancier nuances, but at the same time, the emotions themselves, the circuitry supplying those emotions, is basically all the same.

UD: Some people, like psychopaths, don’t exhibit the typical signs of having a conscience. What do we know by looking at their brains?

PC: The occurrence of psychopaths is deeply disturbing — and, at the same time, fascinating. It’s estimated that about 1 percent of the population has this deficit. And the deficit consists of the absence of feelings of guilt or shame or remorse, and the inability to form long-term attachments, and to form strong attachments, such as the attachments between parents and children, or between mates or friends.

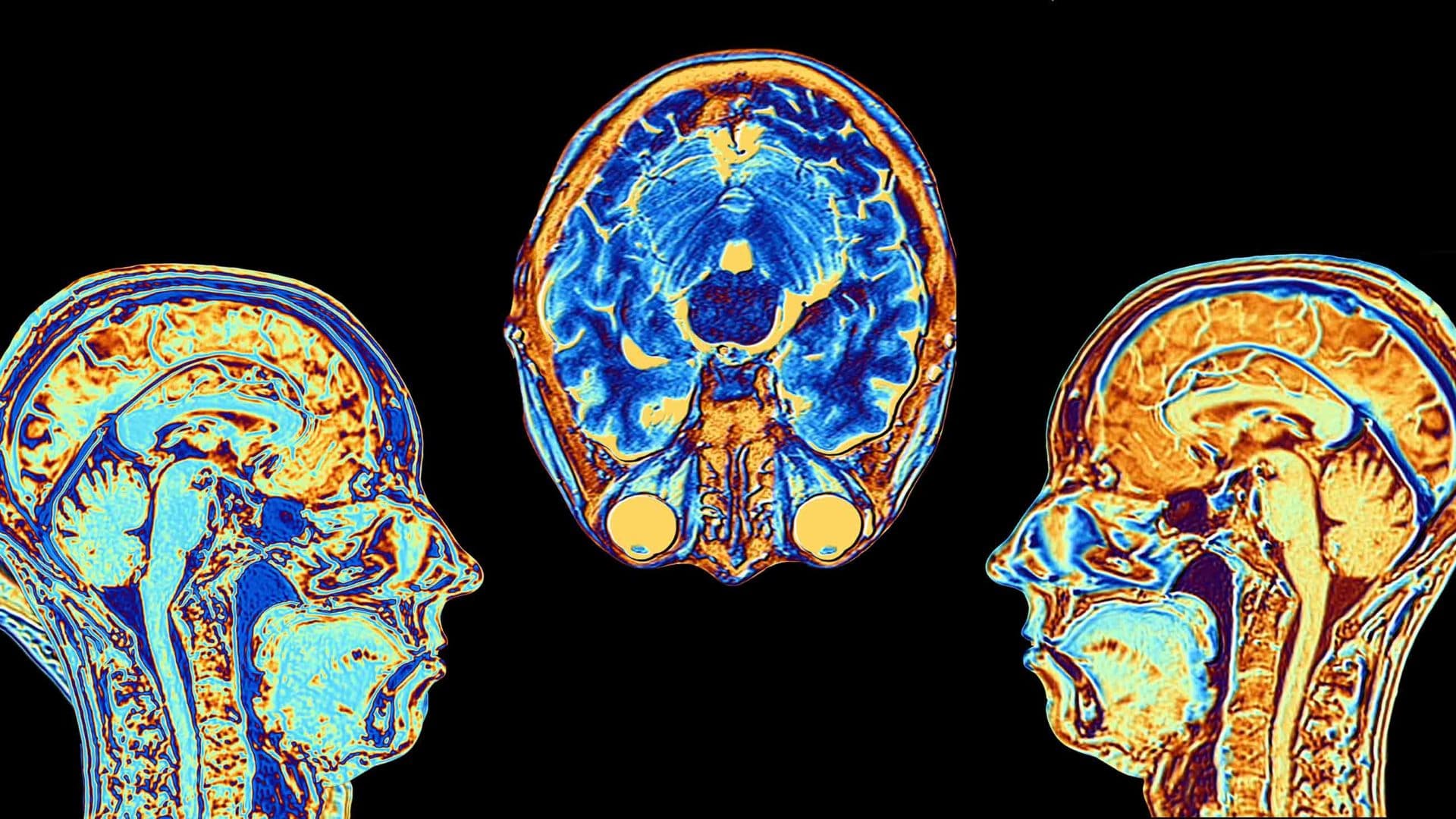

The wiring just isn’t there. What are the structures that are implicated here? We know they’re in the front of the brain and they relate to the reward system and to the emotional structures that are part of the ancient subcortical wiring.

Having said that, what does not show up when you do an MRI scan of a psychopath’s brain — you don’t see any kind of hole, you don’t even see consistent differences in the activity of very particular areas. This suggests that the difficulties are at the micro-structural level — that they have to do with individual neurons and the networks that those neurons form.

It’s going to be hard to access that level in order to understand psychopathy, but I think in the fullness of time, it will be done.

Hope Reese is a writer and editor in Louisville, Kentucky. Her writing has appeared in Undark, The Atlantic, The Boston Globe, The Chicago Tribune, Playboy, Vox, and other publications.

Comments are automatically closed one year after article publication. Archived comments are below.

PC: “The occurrence of psychopaths is deeply disturbing… …the deficit consists of the absence of feelings of guilt or shame or remorse, and the inability to form long-term attachments, and to form strong attachments,”

Are these feelings absent or are they repressed, possibly by a sense of needing to maintain a defensive posture from having the concepts of hope and home broken by similarly broken authority figures? How can one double-blind such a study and remove the sense of being tested when the subject is “trapped” in an MRI? If there is a chance that outlying behavior is the product of outlying experiences perceived as aggression with the intent to define and prescribe through subjugation the subjects of those prescriptions, how can we expect anything better than self-fulfilling prophecy from this type of “investigation”? From my own cursory investigation of narcissism, I find most cases and articles pointing to being subjected to and subjugated by another narcissist.

Are narcissism and psychopathy neighbors on the same spectrum? I’ve noticed a tendency for the language describing these disorders to cite an “absence” of certain “healthy” emotions, almost to the point of implying a complete void. It seems they can’t commit to this, as these symptoms present more as a difference in thresholds than as a perfect void: a drier climate behind a much higher levee of scars, compared with the different balance of the more socially blessed norm.

We seem to exist in this world on a spectrum of balance between nurture and challenge. We also appear to trend towards sharing whatever norm was established for ourselves in our own prescribed spaces of this spectrum. It may simply be a function of the desire to exist: a preference for maintaining the stasis of the cumulative sum of our experience over change, regardless of the perceived polarity of any threatened, imagined, or actual change; a sense to regulate.

In Michael M.’s reply to Martha Gelarden’s comment, he cites an abundance of oxytocin in emotional states tipped both toward ecstasy and paranoid retreat. This seems consistent with a feedback mechanism designed to maintain a holding pattern over having some preconceived ideal or goal to work toward. This is the earmark of the preference to learn over that of an exclusively biased state of a set of hard targets. Obviously targets have function, such as to continue breathing, but again one must admit that this is also consistent with stasis. Even the trend we all exhibit to hoard something to some extent is consistent with and a continuation of our physical maturity sprung from microscopic subsistence. Accumulation seems to be the “practical” application of the physical realm in harmony with its properties.

I probably need to read Churchland’s book but I learned very little new here! Experiences modify neuron microcircuitry and this might cause differing moral decisions–huh!

The one thing that, to my mind, separates me from my dog is that I have more than a little self-pity, while my dog seems to have none.

Article stated: “Animals do feel a kind of guilt when they do something inappropriate.”

Your comment: “The one thing that, to my mind, separates me from my dog is that I have more than a little self-pity, while my dog seems to have none.”

Are you saying the dog holds an advantage here? We do get rather bogged down in this debilitating state, where our four-footed friends seem to have a superior momentum to their egos, if we haven’t broken them. If that is where your comment was headed, as opposed to confusing guilt with self-pity, then we won’t pity the dog.

But we as humans break more than other species we deem to be inferior. Clearly the worst axiom we could ever chase is that any of us are superior in any way to any other. If we had something to teach or help another, we put that train in the sand at that point.

About your book, I would like you to clarify which philosophers had that prejudice; your assertion is a generalization to justify your thesis.

Question: Are you indicating that attachment disorders are caused by a change in the microcircuitry of the neurons? Are oxytocin levels changed, too?

We do know statistically that adoptees suffer from attachment disorders and that adoptees are 4x as likely to suffer from behavioral issues, addictions and death by suicide.

Is there a connection?

A rather brilliant and important question.

Our neurons change their products and activities in the same general manner through which cells and bodies develop.

Stresses induce these changes, and the mechanisms are commonly referred to as epigenetic, meaning that the elimination of or change in gene activity is brought on from the outside.

Methylation, the stopping of a gene’s activity through a molecule attaching to cytosine, has different effects depending on the particular gene. This is because some genes enable the activity of other genes and some disable the first types. So it gets quite complex.

Work on epigenetics first explored development: you only grow so much, and then very precisely stop in response to such signals. (There are several forms of epigenetic marking, and a number of other places along the road from DNA to structures in the body where modification can occur, and a comment can’t clear up this relatively new science.)

We are just these past very few years beginning to work on this root of cognitive and behavioral change.

There are other brain cells that guide and control neurons as well, contributing to the connectivity differences you intuit and have experienced. Dr. Churchland has a huge job in keeping up with these basic things that make each individual unique in all time. If my comment has been successful in touching on the complexity, you can see that factors such as histone acetylation, which lifts specific areas of DNA off their protein “spools,” and the deacetylation compounds that allow them to settle back down into inactivity, are numerous and promoted in many ways. There’ll NEVER be a simple explanation for the exact process in a living mind.

We can naturally predict and learn, and understand others – that is the basic need of even the smallest single celled creatures that move.

Even bacteria and plants have long evolved useful responses to their own kinds, as well as to others, which might not be healthy for them, and so are best countered.

We humans can speak, report, hypothesize, using the information gained by others. It will surprise some that even bacteria “learn” by trading genetic material with others, even of different species.

As huge as we are in comparison, we still use some of the very same molecules through which information is exchanged as the smallest of living things.

Through both reliance on our natural common senses (in all the meanings of the term) and neuroscience, we may begin to understand the induction of active compounds like hormones and neurotransmitters (and the modulators that allow clearer, more coherent neural activity to occur), and of thought, memory, behavior, and response.

A note on oxytocin (OT): While increases are associated with feelings of love and attachment toward those with whom we are familiar, and perhaps nostalgia in conditions of absence, it is also notorious for its association with the opposite feelings, enmity and rejection, toward those we consider outgroup, whether individuals or aggregations we create in our minds. (OT nasal-spray cognitive research has shown this.) This is an important consideration to understand, in a general fashion, when experiencing your emotions. Everything we feel has consequences, and we DO have to recognize that the efficiency of nature occurs due to having useful value.

There’s a LOT of information relevant to adoptees not commonly reported, but the implicit signals one receives nonverbally are overt, and the deceptions of human symbolic verbal language cannot cover the truth of pheromonal and hormonal signaling. Yet, unlike other animals who are rightly more wary of us, we may misinterpret such cues due to our learned and very personal social stress variables. We have to look into every response we experience in ourselves, to discover more about our own presumptions.

One more thing, both a caution of complexity (evolutionary scientists are more and more discovering that less and less of anything is “accidental” than was previously believed, but interlinked in responsive vast whole systems) and optimism:

We are NEVER in stasis. The mind – any neural system – makes associations with previously gained knowledge (or mistaken beliefs) with every new bit of sensory and motor information that occurs while a living mind is awake.

Change is life. Even your most permanent-seeming bone is utterly renewed within 2 to 10 years (depending on the bone). All living things are constantly shedding that which is no longer useful or is worn, and your brain does this sometimes lightning-fast.