The Ethical Quandary of Human Infection Studies

In February of last year, 64 healthy adult Kenyans checked into a university residence in the coastal town of Kilifi. After a battery of medical tests, they proceeded, one by one, into a room where a doctor injected them with live malaria parasites. Left untreated, the infection could have sickened or even killed them, since malaria claims hundreds of thousands of lives every year.

But the volunteers — among them casual laborers, subsistence farmers, and young mothers from nearby villages — were promised treatment as soon as infection took hold. They spent the next few weeks sleeping, eating, and socializing together under the watchful eye of scientists, giving regular blood samples and undergoing physical exams. Some grew sick within a couple of weeks, and were treated and cleared of the parasite before being sent home. Those who did not fall ill were treated after three weeks as a precaution and discharged, too.

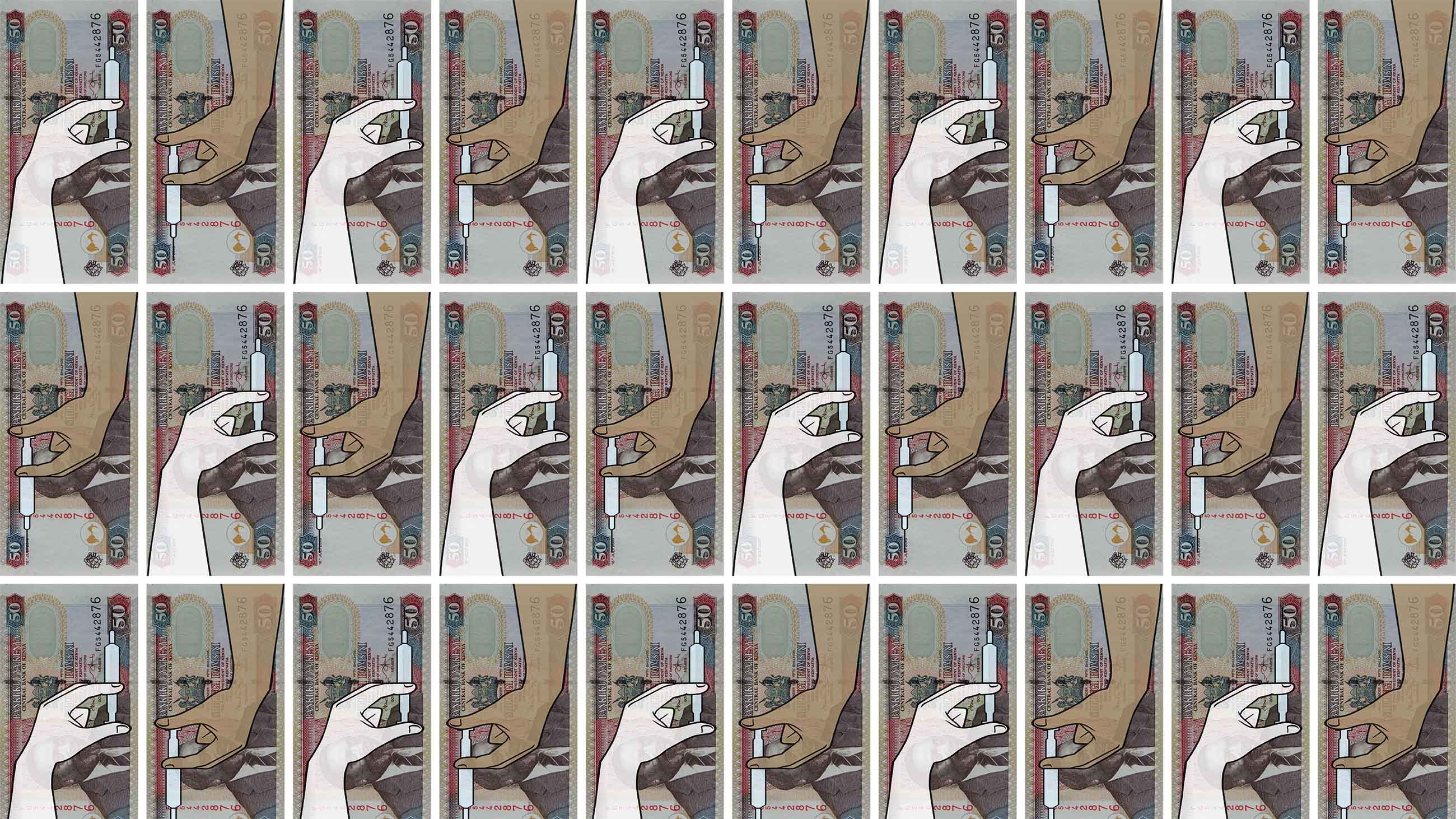

As compensation, the volunteers received between $300 and $480 each, or roughly $20 a day, a rate based on the minimum wage for casual laborers in Kenya and the out-of-pocket allowance set for overnight stays by KEMRI, the Kenya Medical Research Institute.

Human infection studies like this one, also known as human challenge studies, deliberately expose people to an infectious disease in order to study it or to test new drugs. They have long taken place in wealthy nations, where results can be carefully tracked and participants easily monitored. But now there is a push to carry them out in developing countries like Kenya, Mali, and Thailand, closer to the populations that suffer from these diseases and to real-world conditions.

And as researchers expand the range of human infection studies, they are confronting a new set of ethical concerns over acceptable levels of risk and over compensation for participants, who often live in poor communities with limited educational opportunities. Last month, two major medical research funders, the Wellcome Trust and the Bill and Melinda Gates Foundation, issued guidelines aimed at protecting participants in such studies.

“We are thinking about our responsibility as funders,” says Charlie Weller, head of the Wellcome Trust’s vaccine program. “After all, you are infecting a healthy person with a pathogen.”

The guidelines state that “it is important to recognize the potential risks that participants, communities, scientists, and funders assume when they engage in human infection studies and take appropriate measures to minimize and mitigate those risks.” But, they add, “it is equally important to understand the potential benefits that derive from these studies, and ensure that those benefits are maximized and equitably distributed, particularly amongst disease endemic communities.”

Whether the new guidelines are enough to protect the interests of poor populations, for whom the promise of payment for taking part in such studies might constitute an undue influence, however, remains an open question. “Payment could be a very important issue to consider in the developing world context, since volunteers are likely to have low socioeconomic status,” says David Resnik, a bioethicist with the National Institute of Environmental Health Sciences in North Carolina, “and therefore, a payment that would not be viewed as excessive in a developed country might be in a developing one.”

On the surface, voluntary infection research seems to contradict the first principle of medicine: do no harm. Yet such studies have a long and checkered history, and at times they have led to valuable medical breakthroughs.

In 1796, Edward Jenner rubbed fluid from a cowpox blister into the skin of a healthy 8-year-old, James Phipps, on the hunch that it would protect him against smallpox. It did, and the discovery gave rise to vaccines, which have saved millions of lives. But there is a dark side as well: U.S. Army doctors infected Filipino prisoners with plague in the early 1900s, and Nazi doctors gave concentration camp prisoners jaundice during World War II.

Jenner’s work — not to mention research forced on prisoners — would not be allowed today. The Nuremberg Code, developed after World War II, requires that participation in human studies be voluntary, with a complete understanding of the study’s purpose and risks. This principle, known as informed consent, is a cornerstone of modern medical research and needs to be observed for all studies, including those that infect volunteers. The Nuremberg Code also states that the risk of a study must be proportional to the humanitarian benefits it is expected to bring.

Because they are controversial, human infection studies face extra scrutiny from the bodies that provide ethical oversight of medical research. For example, the studies can only be done on diseases that have a reliable cure; chronic, incurable infections like HIV are out of bounds. And they cannot be used if less risky research could yield the same results.

Nonetheless, such studies have grown in number and scope over the last few decades. A recent search of the international registry clinicaltrials.gov turned up at least 155 completed, ongoing, and forthcoming studies involving voluntary infection with diseases including hookworm, typhoid, cholera, schistosomiasis, tuberculosis, and dengue. But only 12 of them were based in developing countries, even though that is where these diseases pose the biggest threat.

“Right now, not many studies take place in low- and middle-income countries, yet that’s where the real value would be,” says Claudia Emerson, a philosopher who specializes in ethics and innovation at McMaster University in Ontario, and one of the people behind the new funders’ guidelines.

The scientific case for conducting human infection studies in disease-endemic areas is clear, says Weller at the Wellcome Trust. People there often respond differently to infection, and to treatment, than their counterparts in wealthier countries. For example, many Kenyans already have some form of natural resistance to malaria. They might also have other co-morbidities, such as HIV or malnutrition, which can influence how their bodies respond to infection. So drugs developed in the United States, say, on white, well-nourished volunteers run the risk of being less effective in the populations who actually need them.

For a long time, scientists felt uneasy about performing studies in countries where poverty and low literacy levels might make participants more vulnerable to exploitation. But increased investment in medical research in developing nations has helped to allay such concerns. In Kenya, where the Wellcome Trust has funded research for three decades, scientists feel they have built sufficient trust with surrounding communities.

“There’s now no reason not to do it here,” says Philip Bejon, a British immunologist who runs the KEMRI-Wellcome Trust Research Program, which is conducting the Kilifi study.

The researchers hope to tease out why some Kenyans are able to control the malaria parasite — either partly or wholly — while others cannot. Such insights could lead to the development of promising new vaccines, while demonstrating that human infection studies can be done safely and reliably in remote low-income areas, Bejon says.

Getting the study off the ground took careful planning. Its protocols had to be designed to uphold the principle of informed consent. Working with ethicists and community liaison officers, the scientists first approached community chiefs and headmen to seek their permission. The scientists then held barazas, or information meetings, at each recruitment site, explaining the methodology and goals of the research. Afterward, prospective participants went through a further consent process, which involved two more information sessions.

By the time volunteers give consent, “they have heard about the study three to four times,” says Melissa Kapulu, one of the trial’s leaders. Early on, however, the researchers were struck by the surplus of volunteers, because recruitment is often a hurdle in clinical studies in developing nations, even those that don’t involve deliberate infection. Worried by this over-enthusiastic response, the team enlisted social scientists to investigate what motivated the second group of volunteers: 36 of the 64 who enrolled in the February study.

They discovered that the main incentive was financial. One 32-year-old woman said she was going to use the money to enroll her son in secondary school. A younger woman said that even though she might make more money per day selling clothes at the market, her business was unpredictable. “If I manage to stay for those 24 days,” she said, referring to the longest enrollment the study allowed, “I will have gotten a lot more money than going to the market.”

The extensive medical check-ups were also a draw for some volunteers, as was the desire to help scientists with their work.

The findings surprised Dorcas Kamuya, a Kenyan bioethicist and one of the social scientists evaluating the participants’ motivations. The compensation levels had been carefully calibrated to reimburse volunteers for their extended time away from work and family, she says: “I thought, this is so little when we are asking so much of them.”

Setting appropriate payment levels remains one of the thorniest ethical challenges for studies in poor countries. A workshop held last year in Entebbe, Uganda, on establishing a human infection study site in the country for schistosomiasis, a parasitic disease carried by freshwater snails, concluded that “almost any payment may be an inducement in Ugandan settings.”

On the other hand, offering volunteers no money at all is also problematic, says Emerson of McMaster University. “The vast majority of people would not want to be in a study if you experience something and not receive anything in return,” she says. She hopes the new guidelines will encourage funders to become more open about their experiences in running volunteer infection studies — including how to best judge compensation rates. “It’s not that this knowledge doesn’t exist — we just haven’t done a good job of sharing it,” she says.

One reason such knowledge isn’t widely shared might be the risk of troublesome headlines. When Kamuya and her colleagues published their research on motivations for joining the Kilifi trial earlier this year, a Kenyan newspaper ran an article under the headline “Want Cash? Volunteer for a Dose of Malaria Parasite.” Some people took to Twitter to ask how they could sign up, while others accused the scientists of unethical behavior.

The story infuriated Kamuya, who felt it played into an old trope: that research in Africa is conducted by Westerners in an exploitative manner. “The way they framed it tended towards a storyline that has been perpetuated over the years,” she says. Had the article mentioned that most of the scientists working on the project were in fact African, she doubts there would have been nearly as much concern. “They didn’t take the time to find out who the researchers were.”

In fact, Kamuya believes there can be greater ethical justification for doing these studies in developing countries, given their need for new, effective drugs. However, she warns, the benefits have to be fairly immediate in order to justify the risks. A potential vaccine in 30 years’ time might not be enough, she says. “If you don’t have an assurance that there will be quick benefits, you might have to rethink whether challenge studies are the way to go.”

Linda Nordling is a journalist based in Cape Town, South Africa, who covers science, education, medicine, and development with a focus on Africa. She can be reached on Twitter at @lindanordling.
